Microservices AntiPatterns and Pitfalls - Are We There Yet Pitfall

With the microservices architecture, every service is deployed as a separate application, meaning that all communication to a microservice from the client or API layer, as well as all communication between services, requires a remote call.

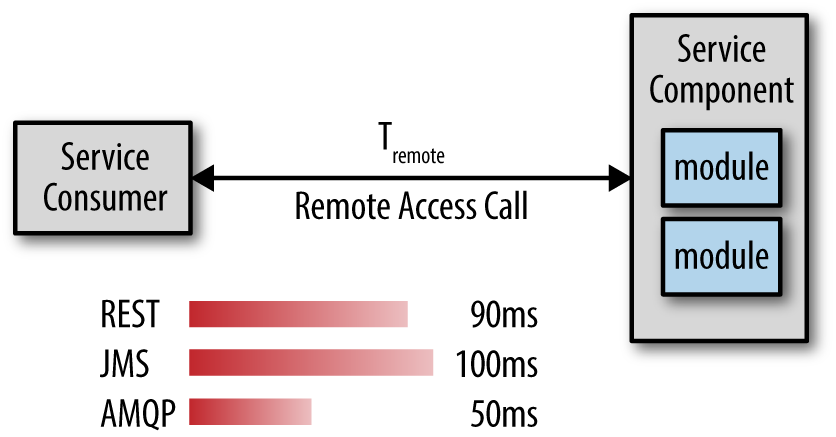

This pitfall occurs when you don’t know how long the remote access call takes. You might assume the latency is around 50 milliseconds, but have you ever measured it? Do you know what the average latency is for your particular environment? Do you know what the “long tail” latency is (e.g., the 95th, 99th, and 99.5th percentiles) for your environment? Measuring both of these metrics is important, because even with good average latency, bad long-tail latency can destroy you.
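As a rough illustration of why both metrics matter, the following Python sketch summarizes a set of latency samples. The sample values are invented for illustration, and the nearest-rank percentile method is just one common convention, not something this pitfall prescribes.

```python
import math
import statistics


def percentile(samples, pct):
    """Nearest-rank percentile: the smallest sample that has at least
    pct percent of all samples at or below it."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def latency_summary(samples_ms):
    """Return the average and long-tail latency for samples in milliseconds."""
    return {
        "mean": statistics.fmean(samples_ms),
        "p95": percentile(samples_ms, 95),
        "p99": percentile(samples_ms, 99),
        "p99.5": percentile(samples_ms, 99.5),
    }


# Hypothetical data: 980 calls at 40 ms and 20 slow calls at 900 ms.
samples = [40.0] * 980 + [900.0] * 20
summary = latency_summary(samples)
print(summary)
```

With this made-up data the average stays under 60 milliseconds, yet the 99th percentile is 900 milliseconds, which is the kind of gap that an average alone would hide.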

Measuring Latency

Measuring the remote access latency under load in your production environment (or a production-like environment) is critical for understanding the performance profile of your application. For example, let’s say a particular business request requires the coordination of four microservices. Assuming that your remote access latency is 100 milliseconds, that particular business request would consume 500 milliseconds in remote access latency alone: five remote calls (the initial request plus four remote calls between services) at 100 milliseconds each. That is half a second of request time without a single line of source code being executed for the actual business request processing. Most applications simply cannot absorb that sort of latency.
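The arithmetic in this example can be written as a small Python sketch. The function name and parameters are illustrative, and it assumes the calls happen sequentially with the same latency on every hop.

```python
def remote_access_overhead_ms(interservice_calls, latency_ms, include_initial_request=True):
    """Total time spent purely on remote access for one business request,
    assuming sequential calls that all have the same latency."""
    hops = interservice_calls + (1 if include_initial_request else 0)
    return hops * latency_ms


# Initial request plus four remote calls between services, at 100 ms each.
overhead = remote_access_overhead_ms(interservice_calls=4, latency_ms=100)
print(overhead)  # 500
```

Substituting a measured long-tail value for `latency_ms` shows how quickly the overhead grows for the slowest requests.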

You might think the way to avoid this pitfall is to simply measure and determine your average latency for your chosen remote access protocol (e.g., REST). However, that only provides you with one piece of information: the average latency of the particular remote access protocol you are using. The other task is to investigate the comparative latency of other remote access protocols such as Java Message Service (JMS), Advanced Message Queuing Protocol (AMQP), and Microsoft Message Queuing (MSMQ).

Comparing Protocols

The comparative latency will vary greatly based on both your environment and the nature of the business request, so it is important to establish these benchmarks on a variety of business requests with different load profiles.
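One way to establish such benchmarks is a small timing harness like the Python sketch below. The two `fake_*` functions are placeholders that only sleep; they stand in for real REST and AMQP client calls, which you would supply for your own environment and business requests.

```python
import statistics
import time


def benchmark(call, iterations=50):
    """Invoke `call` repeatedly and return each latency in milliseconds."""
    latencies = []
    for _ in range(iterations):
        start = time.perf_counter()
        call()
        latencies.append((time.perf_counter() - start) * 1000)
    return latencies


def compare(protocol_calls, iterations=50):
    """Benchmark each named callable and summarize its latencies."""
    results = {}
    for name, call in protocol_calls.items():
        latencies = benchmark(call, iterations)
        results[name] = {
            "mean_ms": statistics.fmean(latencies),
            "max_ms": max(latencies),
        }
    return results


# Placeholders only: replace with real remote calls for the same business request.
def fake_rest_call():
    time.sleep(0.002)


def fake_amqp_call():
    time.sleep(0.001)


results = compare({"REST": fake_rest_call, "AMQP": fake_amqp_call}, iterations=20)
for name, stats in results.items():
    print(f"{name}: mean={stats['mean_ms']:.2f} ms, max={stats['max_ms']:.2f} ms")
```

Running the same harness against several business requests and load levels gives the comparison the text describes. A single run against one request says little about the others.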

Figure 9-1. Comparing remote access latency

In the hypothetical example in Figure 9-1, AMQP is almost twice as fast as REST. You can use this information to make intelligent choices about which requests should use which remote access protocol. For example, you may choose to use REST for all communication from client requests to your microservices and AMQP for all interservice communication in order to increase performance within your application.
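To see what such a choice might buy you, the Python sketch below revisits the earlier four-service request. The latency figures are assumptions chosen only to reflect "almost twice as fast"; they are not measurements, and your own benchmarks should replace them.

```python
# Hypothetical mean latencies in milliseconds; substitute your own benchmarks.
MEAN_LATENCY_MS = {"REST": 100, "AMQP": 55}


def request_overhead_ms(client_protocol, interservice_protocol, interservice_calls):
    """Remote access overhead for one client call plus sequential interservice calls."""
    return (MEAN_LATENCY_MS[client_protocol]
            + interservice_calls * MEAN_LATENCY_MS[interservice_protocol])


all_rest = request_overhead_ms("REST", "REST", interservice_calls=4)
mixed = request_overhead_ms("REST", "AMQP", interservice_calls=4)
print(all_rest, mixed)  # 500 320
```

Under these assumed numbers, moving only the interservice calls to AMQP reduces the remote access overhead from 500 to 320 milliseconds.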

Performance is not the only consideration when selecting your remote access protocol. As you will see in the Give It a Rest Pitfall, you may also want to leverage messaging to provide additional capabilities to your application.