video characteristics

Video composition and video compression

Transclude of video_composition_and_video_compression.excalidraw

B/P/I Frames

The concept of I-frames, P-frames, and B-frames is fundamental to the field of video compression. These three frame types are used in specific situations to improve the codec’s compression efficiency, the compressed stream’s video quality, and the resilience of the stream to transmission and storage errors & failures.

Inter / Intra prediction

To compress the next frame with a modern video codec like H.264 or HEVC, I would proceed roughly as follows:

- Break the frame into blocks of pixels (macroblocks) and compress them one at a time.

- In order to compress each macroblock, the first step is to find a macroblock similar to the one we want to compress by searching in the current frame or previous or future frames.

- The best-match macroblock’s location is recorded (which frame and its position in that frame). Then, the two macroblocks’ difference is compressed and sent to the decoder along with the location information.

- Encoders search for matching macroblocks to reduce the size of the data that needs to be transmitted. This is done via a process of motion estimation and compensation. This allows the encoder to find the horizontal and vertical displacement of a macroblock in another frame.

- An encoder can search for a matching block within the same frame (Intra Prediction) or in adjacent frames (Inter Prediction).

- It compares the Inter and Intra prediction results for each macroblock and chooses the “best” one. This process is dubbed “Mode Decision,” and in my opinion, it’s the heart of a video codec.
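The search described in the steps above can be illustrated with a minimal block-matching sketch. It is not how H.264 or HEVC encoders are actually implemented (real encoders use fast search patterns, sub-pixel motion and rate-distortion costs). It assumes grayscale frames stored as lists of rows, an exhaustive search window, and the sum of absolute differences (SAD) as the similarity measure. The names `sad`, `get_block` and `best_match` are hypothetical.

```python
def sad(block_a, block_b):
    """Sum of absolute differences between two equally sized pixel blocks."""
    return sum(
        abs(a - b)
        for row_a, row_b in zip(block_a, block_b)
        for a, b in zip(row_a, row_b)
    )


def get_block(frame, top, left, size):
    """Cut a size x size block out of a frame stored as a list of rows."""
    return [row[left:left + size] for row in frame[top:top + size]]


def best_match(current, reference, top, left, size, search_range):
    """Exhaustively search `reference` around (top, left).

    Returns (cost, dx, dy): the lowest SAD found and the horizontal and
    vertical displacement (the motion vector) of the best-matching block.
    """
    target = get_block(current, top, left, size)
    height, width = len(reference), len(reference[0])
    best = None
    for dy in range(-search_range, search_range + 1):
        for dx in range(-search_range, search_range + 1):
            y, x = top + dy, left + dx
            # Skip candidates that fall partly outside the reference frame.
            if y < 0 or x < 0 or y + size > height or x + size > width:
                continue
            cost = sad(target, get_block(reference, y, x, size))
            if best is None or cost < best[0]:
                best = (cost, dx, dy)
    return best


# Toy data (hypothetical): an 8x8 reference frame, and a current frame in
# which the content has moved 1 pixel right and 2 pixels down.
reference = [[y * 8 + x for x in range(8)] for y in range(8)]
current = [
    [reference[y - 2][x - 1] if y >= 2 and x >= 1 else 0 for x in range(8)]
    for y in range(8)
]

cost, dx, dy = best_match(current, reference, top=4, left=4, size=2, search_range=2)
print(cost, dx, dy)  # 0 -1 -2: the block sits 1 px left and 2 px up in the reference
```

With a perfect match the difference block is all zeros, so only the displacement (dx, dy) and the reference frame index would need to be sent to the decoder.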

I-frame

An I-frame or a Key-Frame or an Intra-frame consists ONLY of macroblocks that use Intra-prediction. That’s it.

Every macroblock in an I-frame is allowed to refer to other macroblocks only within the same frame. That is, it can only use “spatial redundancies” in the frame for compression. Spatial Redundancy is a term used to refer to similarities between the pixels of a single frame.

An I-frame comes in different avatars in different video codecs as IDR, CRA, or BLA frames, but the essence remains the same for these types of I-frames — no temporal prediction allowed in an I-frame.

P-frame

P-frame stands for Predicted Frame and allows macroblocks to be compressed using temporal prediction in addition to spatial prediction. For motion estimation, P-frames use frames that have been previously encoded. In essence, every macroblock in a P-frame can be:

- temporally predicted, or

- spatially predicted, or

- skipped (i.e., you tell the decoder to copy the co-located block from the previous frame, which amounts to a “zero” motion vector).

B-frame

A B-frame is a frame that can refer to frames that occur both before and after it. The B stands for Bi-Directional for this reason. If your video codec uses macroblock-based compression (like H.264/AVC does), then each macroblock of a B-frame can be:

- predicted using backward prediction (using frames that occur in the future)

- predicted using forward prediction (using frames that occur in the past)

- predicted without inter-prediction – only Intra

- skipped completely (with Intra or Inter prediction).

And because B-frames have the option to refer to and interpolate from two (or more) frames that occur before and after it (in the time dimension), B-frames can be incredibly efficient in reducing the size of the frame while retaining the video quality. They can exploit both spatial and temporal redundancy (future & past frames) making them very useful in video compression.

Although B-frames are quite useful for video compression, they also come with drawbacks:

- it’s not possible to cut a video exactly on an arbitrary frame when using stream copy, i.e. without re-encoding (see https://superuser.com/a/459488 for more information)

- the frames are not stored in display order, so when live streaming (live, not VOD) a video with B-frames, expect some odd behavior (e.g. very high latency, up to 30 s)
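The out-of-order point can be made concrete with a small sketch. It assumes the classic arrangement in which every B-frame references the nearest anchor (I or P) before and after it, so the later anchor has to be decoded first; newer codecs allow more flexible referencing. The function name `decode_order` is hypothetical.

```python
def decode_order(display_order):
    """Reorder frame types from display order into a possible decode order.

    Assumption: each B-frame depends on the anchor (I or P) that follows it
    in display order, so that anchor must be sent and decoded first.
    """
    ordered = []
    waiting_b = []  # B-frames waiting for their future anchor
    for index, frame_type in enumerate(display_order):
        if frame_type == "B":
            waiting_b.append((frame_type, index))
        else:
            ordered.append((frame_type, index))
            ordered.extend(waiting_b)
            waiting_b = []
    # Trailing B-frames would need an anchor from the next GOP; kept last here.
    ordered.extend(waiting_b)
    return ordered


frames = decode_order("IBBPBBP")
print(" ".join(f"{t}{i}" for t, i in frames))  # I0 P3 B1 B2 P6 B4 B5
```

The number after each letter is the display position. The player has to hold decoded frames until their display time arrives, which is one reason B-frames add delay to a live pipeline.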

title: ffmpeg command to remove the b-frames from a video

~~~bash
# -bf 0: allow at most 0 consecutive B-frames, so the re-encoded video has none
ffmpeg -i input.mp4 -bf 0 output.mp4
~~~

Frame compression comparison

Sources:

Group Of Pictures (GOP)

A GOP can contain the following picture types:

- I-frame

- P-frame

- B-frame

- D-frame (only in MPEG-1 video)

An I frame indicates the beginning of a GOP. Afterwards several P and B frames follow. In older designs, the allowed ordering and referencing structure is relatively constrained.

The GOP structure is often referred to by two numbers, for example, M=3, N=12.

The first number tells the distance between two anchor frames (I or P).

The second one tells the distance between two full images (I-frames): it is the GOP size.

For the example M=3, N=12, the GOP structure is IBBPBBPBBPBBI, where the final I is the first frame of the next GOP. Instead of the M parameter, the maximum number of B-frames between two consecutive anchor frames can be used.
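As a rough illustration of the M/N notation, the sketch below builds the display-order frame pattern of one GOP. The function name `gop_pattern` is hypothetical, and the sketch assumes a simple fixed structure with no scene-change keyframes.

```python
def gop_pattern(m, n):
    """Frame types of one GOP in display order.

    m: distance between anchor frames (I or P)
    n: distance between I-frames (the GOP size)
    """
    types = []
    for position in range(n):
        if position == 0:
            types.append("I")
        elif position % m == 0:
            types.append("P")
        else:
            types.append("B")
    return "".join(types)


print(gop_pattern(3, 12))        # IBBPBBPBBPBB
print(gop_pattern(3, 12) + "I")  # IBBPBBPBBPBBI (the trailing I opens the next GOP)
print(gop_pattern(1, 6))         # IPPPPP (M=1 means no B-frames)
```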

Keyframe interval

The keyframe interval controls how often a keyframe (I-frame) is created in the video. In general, the longer the keyframe interval, the more compression is applied to the content, although that doesn’t necessarily mean a noticeable reduction in quality. For example, if your interval is set to 2 seconds and your frame rate is 30 frames per second, a keyframe is produced roughly every 60 frames.
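The arithmetic is simple enough to sketch. The helper name `keyframe_interval_frames` is hypothetical; it only converts an interval in seconds to a frame count, which is the form most encoders expect for a GOP size setting.

```python
def keyframe_interval_frames(interval_seconds, frames_per_second):
    """Number of frames between two keyframes for a fixed interval."""
    return round(interval_seconds * frames_per_second)


print(keyframe_interval_frames(2, 30))     # 60
print(keyframe_interval_frames(2, 25))     # 50
print(keyframe_interval_frames(2, 29.97))  # 60 (rounded from 59.94)
```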

If you want to seek forward or back in a video, you need to have an I-frame at the point of restarting the video. Right?

Think about it: if you seek to a P or a B-frame and the decoder has already dumped its reference frames from memory, how is it going to reconstruct the picture? The video player will naturally look for a starting point (an I-frame) that it can decode successfully and start playing back from that point onwards.

This brings us to another interesting point.

If you place Key Frames far apart in the video – say every 20 seconds, then your users can seek in increments of 20 seconds only. That’s a terrible user experience!

However, if you place too many key-frames, the seeking experience will be great, but the video’s size will be too big and might result in buffering!

Designing the optimal GOP and mini-GOP structure is truly a balancing art.

The term “keyframe interval” is not universal and most encoders have their own term for this.

Other programs and services might call the interval the “GOP size” or “GOP length”, going back to the “Group of Pictures” abbreviation.

title: ffmpeg command to change the keyframe interval of a video

~~~bash
# -g 50: GOP size of 50 frames, i.e. a 2 s keyframe interval (assuming 25 fps input)
# -keyint_min 50: minimum distance between keyframes; set to the same value as -g for a fixed interval
# -sc_threshold 0: disable scene-change detection so no extra keyframes are inserted
ffmpeg -i input.mp4 \
  -g 50 \
  -keyint_min 50 \
  -sc_threshold 0 \
  output.mp4
~~~

title: ffprobe command to check the frame timestamps

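~~~bash
# Print one CSV line per video frame (coded picture number and presentation timestamp).
# Note: field names vary across FFmpeg versions; newer builds may expect pts_time
# instead of pkt_pts_time.
ffprobe input.mp4 \
  -select_streams v \
  -show_entries frame=coded_picture_number,pkt_pts_time \
  -of csv=p=0:nk=1 \
  -v 0 \
  -pretty \
  | less
~~~

Sources: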

- https://tipsforefficiency.com/obs-keyframe-interval/

- https://blog.video.ibm.com/streaming-video-tips/keyframes-interframe-video-compression/#keyframe

Video Bitrate

Bitrate (or bit rate), in the simplest terms, is how much information your video sends out per second from your device to an online platform.

Technically, bitrate means the quantity of data required for your encoder to transmit video or audio in one single second. Bitrates are measured in:

bps (bit per second)

Kbps (kilobit per second): 1,000 bps

Mbps (megabit per second): 1,000 Kbps

Gbps (gigabit per second): 1,000 Mbps

Tbps (terabit per second): 1,000 Gbps

In livestreaming, Mbps is the most common bitrate unit for videos and Kbps for audio.

Note: Bit per second (bps) should not be confused with byte per second (Bps). For example, Kbps vs. KBps and Mbps vs. MBps.

For uncompressed (PCM) audio, bitrate is calculated using this formula:

Sampling frequency x bit depth x channels = bitrate
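The formula above describes uncompressed (PCM) audio, where frequency is the sampling rate. As a worked example, the sketch below plugs in CD-quality audio (44.1 kHz, 16-bit, stereo). The function name `pcm_bitrate_bps` is hypothetical.

```python
def pcm_bitrate_bps(sample_rate_hz, bit_depth, channels):
    """Bitrate of uncompressed audio in bits per second."""
    return sample_rate_hz * bit_depth * channels


cd_audio = pcm_bitrate_bps(44_100, 16, 2)
print(cd_audio)         # 1411200 (bits per second)
print(cd_audio / 1000)  # 1411.2 (kbps)
```

Compressed formats such as AAC or MP3 end up far below this figure, which is why encoder bitrate settings are chosen rather than derived from this formula.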

Types of Bitrates

CBR: Constant Bitrate

With CBR, the encoder keeps the output bitrate the same throughout the video, regardless of the amount of information in each frame. CBR encoding gives predictable bandwidth usage and file sizes and helps prevent playback hiccups. It is recommended for streaming videos with consistent frames and similar motion levels, such as news reports.

VBR: Variable Bitrate

Unlike CBR, VBR looks at each individual frame and decides which frames need a lot of compression and which need only a little. The encoder adjusts the bitrate automatically, raising it for complex scenes and lowering it for simple ones. This type of bitrate is ideal for dynamic video content such as music concerts or sports events.

Why is bitrate important?

Bitrates directly affect the file size and quality of your video. That is, how sharp your video looks and how big the file size is when you stream or upload the video. Bitrates also help you determine the internet connection speed you need to be able to watch. It also helps you decide how much it is going to cost for your bandwidth to host the video on your site and deliver to your audience.

Bitrates affect:

- Video and audio file size

- Video and audio quality

- Internet connection speed

- Cost for bandwidth
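To make the link between bitrate and file size concrete, here is a minimal sketch that estimates the size of a stream from its average bitrate and duration. The function name `file_size_megabytes` and the 128 kbps audio figure are hypothetical, and container overhead is ignored. Note the division by 8 when going from bits to bytes.

```python
def file_size_megabytes(bitrate_kbps, duration_seconds):
    """Approximate size of a stream at a given average bitrate."""
    total_bits = bitrate_kbps * 1000 * duration_seconds
    total_bytes = total_bits / 8  # 8 bits per byte
    return total_bytes / 1_000_000


# One minute of video at 3000 kbps.
print(file_size_megabytes(3000, 60))         # 22.5

# Ten minutes of 3000 kbps video plus 128 kbps audio.
print(file_size_megabytes(3000 + 128, 600))  # 234.6
```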

More bits per second means more information in your video in every second, which means more details on your screen and, ultimately, better video quality.

The same idea applies to audio bitrates too. The higher the bitrate is, the better the sound will turn out.

What to consider when choosing a Bitrate

- resolution (e.g. 720p)

- frame rate (e.g. 30fps)

- video accessibility

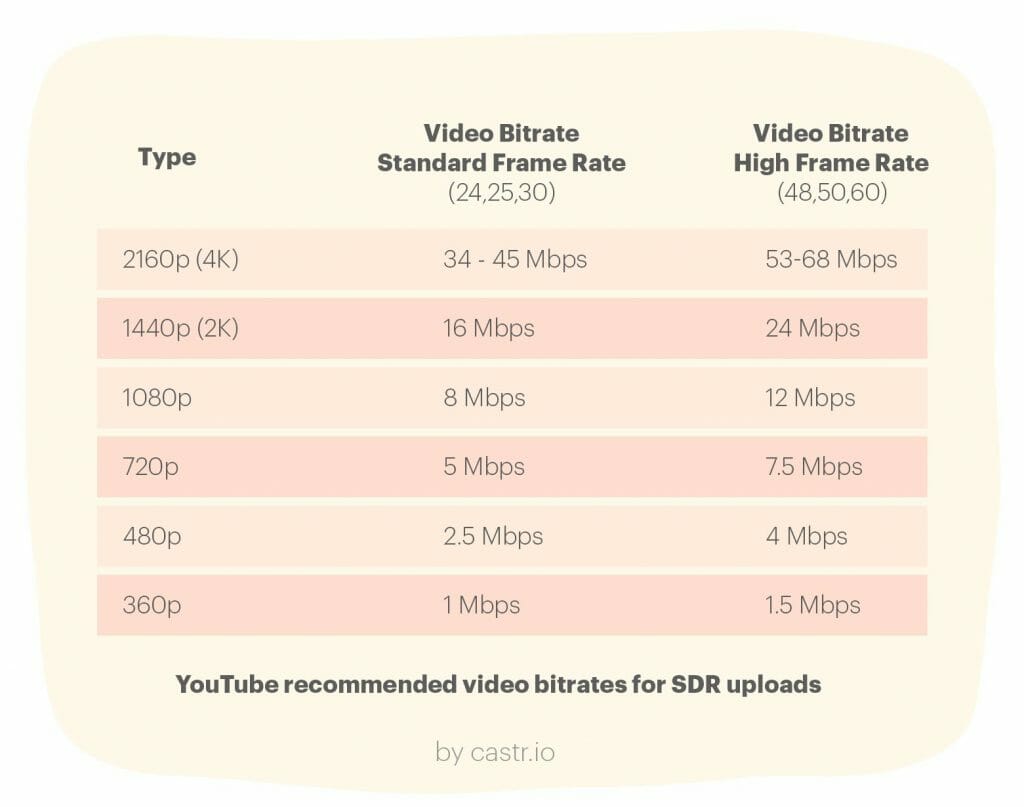

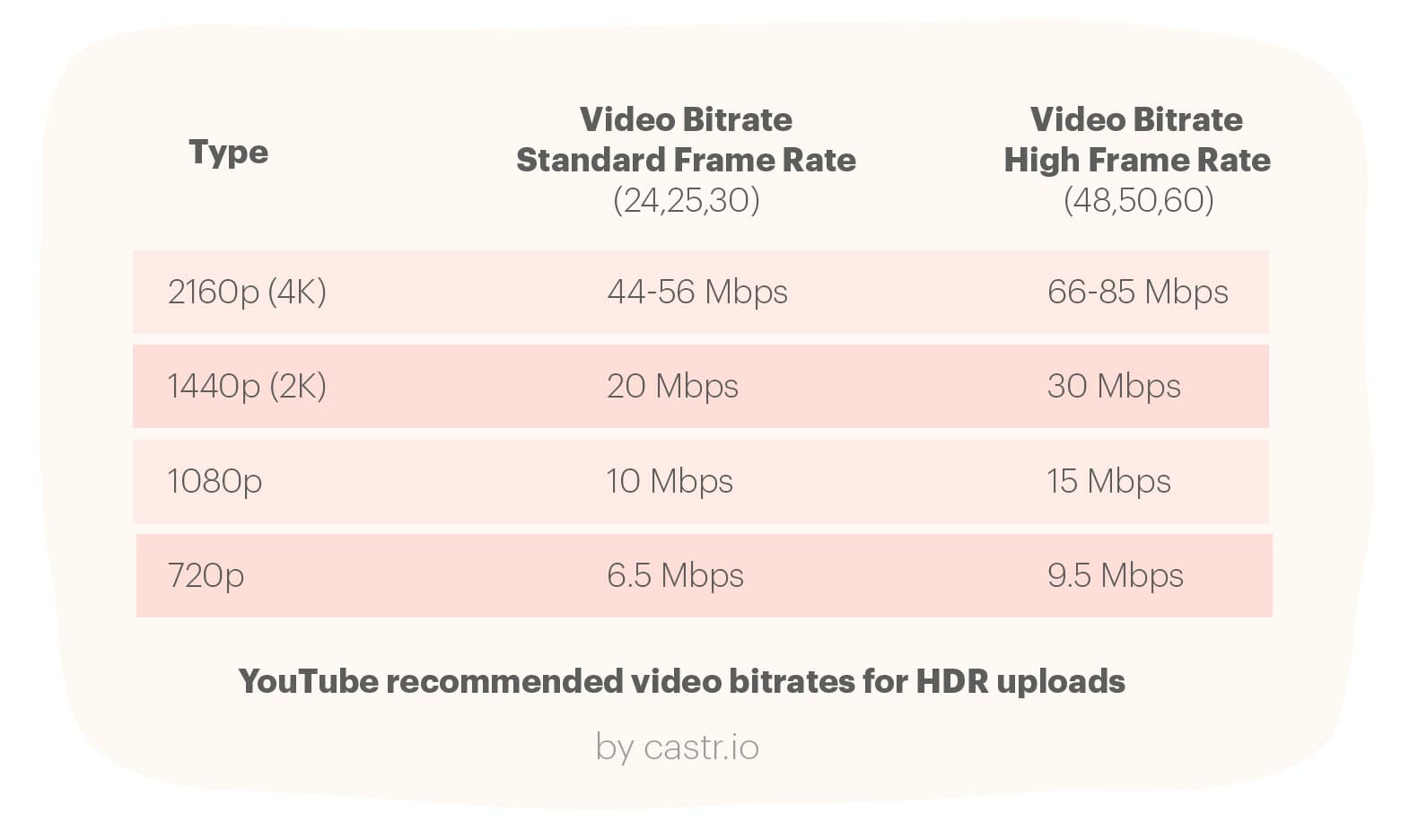

Recommended video bitrates for SDR uploads

https://castr.io/blog/what-is-video-bitrate/

Wowza formula to compute the bitrate

BPP: Bits per Pixel

Bits per pixel (BPP) is the average amount of data applied to each pixel in a video file. It’s calculated by dividing the data rate (in bits per second) by a video’s pixels per second (width in pixels times height in pixels times frames per second):

data rate / (resolution * frames per second) = BPP

BPP typically ranges from 0.05 to 0.150, depending on the amount of motion in the scene. The more motion there is, the higher the BPP should be.

BPP is also inversely proportional to resolution: The lower the resolution, the higher the BPP can be without generating huge bitrates. That’s because there are fewer total pixels to duplicate, or reference, in a lower resolution video than in a higher resolution video.

Bitrate formula

(resolution * frames per second * BPP) / 1000 = bitrate

E.g.

1920 * 1080 * 25 * 0.150 / 1000 = 7776 kbps

1280 * 720 * 25 * 0.150 / 1000 = 3456 kbps
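The two worked examples above can be reproduced with a short sketch. The function names `bitrate_kbps` and `bits_per_pixel` are hypothetical; the second function is simply the same formula solved for BPP, with the bitrate converted from kbps back to bits per second.

```python
def bitrate_kbps(width, height, frames_per_second, bpp):
    """Video bitrate in kbps for a given resolution, frame rate and BPP."""
    return width * height * frames_per_second * bpp / 1000


def bits_per_pixel(bitrate_in_kbps, width, height, frames_per_second):
    """Inverse of bitrate_kbps: the BPP implied by a given bitrate."""
    return bitrate_in_kbps * 1000 / (width * height * frames_per_second)


print(round(bitrate_kbps(1920, 1080, 25, 0.150)))     # 7776
print(round(bitrate_kbps(1280, 720, 25, 0.150)))      # 3456
print(round(bits_per_pixel(3000, 1280, 720, 25), 3))  # 0.13
```

The last line shows the check in the other direction: a 3000 kbps stream at 720p and 25 fps lands at about 0.13 BPP, inside the 0.05 to 0.150 range quoted above.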

https://www.wowza.com/docs/how-to-optimize-the-video-bitrate

title: ffmpeg command to change the video bitrate

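~~~bash
# Target an average video bitrate of 3000 kbps and cap peaks at 3000 kbps.
# -bufsize 6000k is the rate-control buffer over which the cap is enforced
# (2 s of video at this rate); it is not an upper bitrate limit.
ffmpeg -i input.mp4 \
  -b:v 3000k \
  -maxrate 3000k \
  -bufsize 6000k \
  output.mp4
~~~

video transformation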

Video encoding: This term describes the process of converting raw video — which is much too large to broadcast or stream in its original state when a camera captures it — into a compressed format that makes it possible to transmit across the internet.

Without video encoding, there would be no streaming. It’s the first step in delivering content to an online audience because it’s what makes video digital.

Codec: Encoding relies on two-part compression technology called codecs. Short for “coder-decoder” or “compressor-decompressor” (we love a good double meaning), codecs use algorithms to discard visual and audio data that won’t affect a video’s appearance to the human eye. Once video files are a more manageable size, it’s feasible to stream them to audiences and decompress them when they reach different devices.

If you’re confused about the distinction between codecs and encoders, think of it this way: encoders are hardware (like Teradek) or software (like OBS Studio) that perform the action of encoding by using codecs. H.264/AVC, HEVC, and VP9 are common video codecs you’ll come across (H.264 most of all), and AAC, MP3, and Opus are examples of audio codecs.

Decoding: Decoding is the act of decompressing encoded video data to display or alter it in some way. For example, a human viewer can’t watch an encoded video, so their device must decode the data and reassemble the “packets” in the right order to display it on a screen.

Transcoding: Transcoding is an umbrella term that refers to taking already-encoded content, decoding/decompressing it, implementing alterations, and recompressing it before last-mile delivery. Transcoding video might involve making smaller changes, such as adding watermarks and graphics; or much larger ones, like converting content from one codec to another. Actions like transrating and transizing fall under the definition of transcoding.

Transcoding often falls right in the middle along the camera to end-user pipeline. A potential use case would be applying a protocol like RTMP, RTSP, SRT, or WebRTC for first-mile delivery to a transcoder — which can be either a media server or cloud-based streaming platform — and then transcoding your content into a different codec (or multiple codecs) to make it compatible with the kinds of protocols your audience’s devices support. These include protocols like Apple HLS, MPEG-DASH, HDS, and WebRTC (which is applicable for both first and last mile).

Transmuxing: You’ve also likely heard the term “transmuxing,” which refers to repackaging, rewrapping, or packetizing content. Transmuxing is not to be confused with transcoding because the former involves switching delivery formats while the latter requires more computational power and makes changes to the content itself.