Microservices AntiPatterns and Pitfalls - The Timeout AntiPattern

Abstract

In most cases, setting a timeout value can lead you down a bad path, e.g. timing out the request right when the service has successfully placed the trade. Two common techniques for choosing the timeout value:

- calculate the database timeout within the service and use that as a base for determining what the service timeout should be

- calculate the max time under load and double it (more popular)

However, this is an anti-pattern: if the doubled value comes to 10 seconds, for example, every request has to wait the full 10 seconds just to find out the service is not responsive.

Rather than relying on timeout values, use the circuit breaker pattern:

Circuit breakers can monitor the remote service with:

- a simple heartbeat check

- synthetic / fake transaction

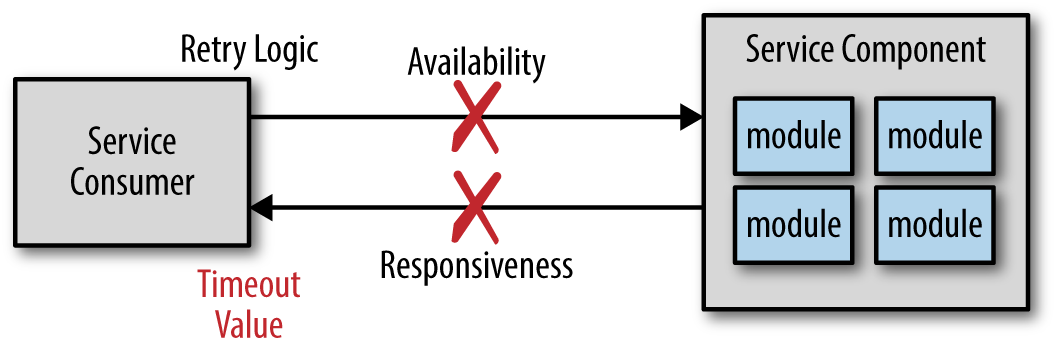

Microservices is a distributed architecture, meaning all of the components (i.e., services) are deployed as separate applications and are accessed remotely through some sort of remote access protocol. One of the challenges of any distributed architecture is managing remote process availability and responsiveness. Although service availability and service responsiveness are both related to service communication, they are two very different things. Service availability is the ability of the service consumer to connect with the service and be able to send it a request, as shown in Figure 2-1. Service responsiveness, on the other hand, is the time it takes for the service to respond to a given request once you’ve communicated with it.

Figure 2-1. Service availability vs. responsiveness

If the service consumer cannot connect with or talk to the service (i.e., availability), the service consumer is usually notified within milliseconds, as Figure 2-1 shows. The service consumer may choose to pass this error on to the client or retry the connection several times before giving up and throwing some sort of connection failure. However, assuming the service was reached and a request was made, what happens if the service doesn’t respond? In this case the service consumer can choose to wait indefinitely or leverage some sort of timeout value.

Using a timeout value for service responsiveness seems like a good idea, but can lead you down a bad path known as the timeout anti-pattern.

Using Timeout Values

You might be a bit confused at this point. After all, isn’t setting a timeout value a good thing? Maybe, but in most cases it can lead you down a bad path. Consider the example where you are making a service request to buy 1000 shares of Apple stock (AAPL). The very last thing you want to do as the service consumer is time out the request right when the service has successfully placed the trade and is about to give you a confirmation number. You can try to resubmit the trade, but you have to add significant complexity to your service to determine whether this is a new trade or a duplicate trade. Furthermore, since you don’t have a confirmation number from the first trade, it is very difficult to know whether the trade was actually successful or not.

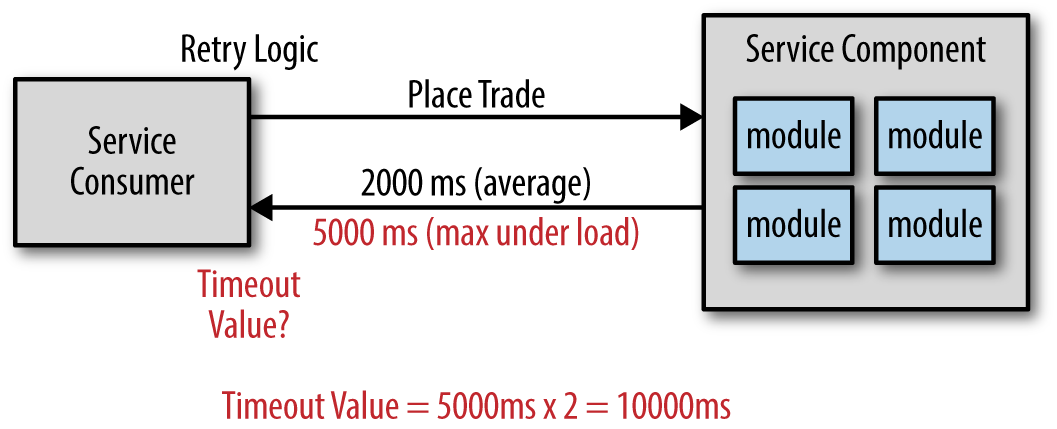

So, given that you don’t want to time out the request too early, what should the timeout value be? There are several techniques to address this problem. The first is to calculate the database timeout within the service and use that as a base for determining what the service timeout should be. The second solution, which is by far the most popular technique, is to calculate the maximum time under load and double it, thereby giving you that extra buffer in the event it sometimes takes longer.

Figure 2-2 illustrates this technique. Notice that on average the service responds within 2 seconds to place a trade. However, under load the maximum time observed is 5 seconds. Therefore, using the doubling technique, the timeout value for the service consumer would be 10 seconds. Again, the intention with this technique is to avoid timing out the request when in fact it was successful and was in the process of sending you back the confirmation number.

Figure 2-2. Calculating a timeout value
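The doubling technique amounts to a one-line calculation. The following is only a minimal sketch: the function name `doubled_timeout` and the latency samples are hypothetical, chosen so that the slowest response under load is 5 seconds, as in the example above.

```python
def doubled_timeout(latencies_under_load_s):
    """Return twice the slowest latency observed under load, in seconds."""
    if not latencies_under_load_s:
        raise ValueError("need at least one latency sample")
    return 2 * max(latencies_under_load_s)


# Hypothetical measurements (in seconds) taken while the service is under load.
samples_under_load = [1.8, 2.0, 2.3, 5.0, 3.1]

# Slowest observed response is 5 seconds, so the consumer timeout is 10 seconds.
timeout_s = doubled_timeout(samples_under_load)
```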

It should be clear now why this approach is an antipattern. While this seems like a perfectly logical solution to the timeout problem, it causes every request from service consumers to have to wait 10 seconds just to find out the service is not responsive. Ten seconds is a long time to wait for an error. In most cases users won’t wait more than 2 to 3 seconds before hitting the submit button again or giving up and closing the screen. There must be a better way to deal with service responsiveness.

Using the Circuit Breaker Pattern

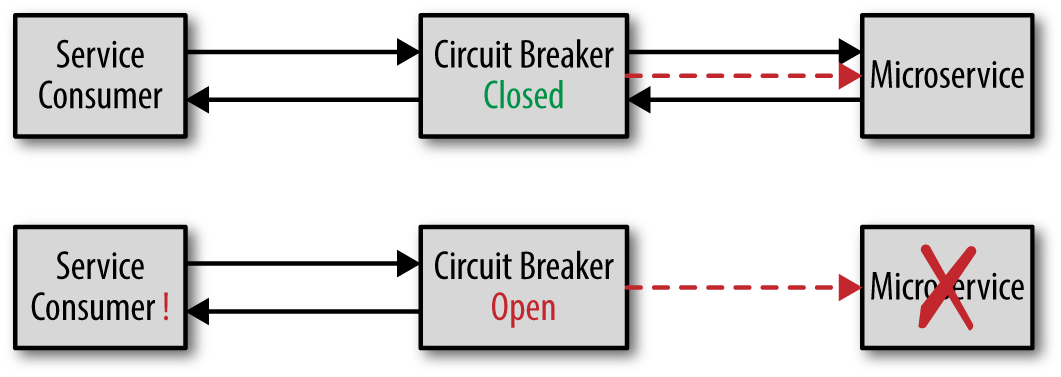

Rather than relying on timeout values for your remote service calls, a better approach is to use something called the circuit breaker pattern. This software pattern works just like a circuit breaker in your house. When it is closed, electricity flows through it, but once it is open, no electricity can pass until the breaker is closed. Similarly, if a software circuit breaker detects that a service is not responding, it will open, rejecting requests to that service. Once the service becomes responsive, the breaker will close, allowing requests through.

Figure 2-3 illustrates how the circuit breaker pattern works. The circuit breaker continually monitors the remote service, ensuring that it is alive and responsive (more on that part later). While the service remains responsive the breaker will be closed, allowing requests through. If the remote service suddenly becomes unresponsive, the circuit breaker opens, thus preventing requests from going through until the service once again becomes responsive. However, unlike the circuit breaker in your house, a software circuit breaker can continue monitoring the service and close itself once the remote service becomes responsive again.

Figure 2-3. Circuit breaker pattern

Depending on the implementation, the service consumer either checks with the circuit breaker first to see if it is open or closed, or the check is done through an interceptor pattern so the service consumer doesn’t need to know the circuit breaker is in the request path. In either case, the significant advantage of the circuit breaker pattern over timeout values is that the service consumer knows right away that the service has become unresponsive rather than having to wait for the timeout value. In the prior example, if a circuit breaker were used instead of the timeout value, the service consumer would know within milliseconds that the trade-placement service was not responsive rather than having to wait 10 seconds (10,000 milliseconds) to get the same information.
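To make the fail-fast behavior concrete, here is a minimal sketch of a breaker that the service consumer consults before each call. It is not taken from any particular library: `CircuitBreaker`, `CircuitOpenError`, `check_service`, and the `probe` callable are all hypothetical names, and the probe stands in for whatever monitoring the breaker uses.

```python
class CircuitOpenError(Exception):
    """Raised when a request is rejected because the breaker is open."""


class CircuitBreaker:
    def __init__(self, probe):
        # probe: hypothetical zero-argument callable that returns True when
        # the remote service is responsive (assumption, not a library API).
        self._probe = probe
        self._open = False  # closed breaker = requests flow through

    @property
    def is_open(self):
        return self._open

    def check_service(self):
        """Run one monitoring cycle; intended to be called periodically."""
        try:
            responsive = bool(self._probe())
        except Exception:
            # A probe that blows up is treated as an unresponsive service.
            responsive = False
        # Open when unresponsive, close again once the service recovers.
        self._open = not responsive

    def call(self, request_fn, *args, **kwargs):
        """Reject immediately when open, otherwise forward the request."""
        if self._open:
            raise CircuitOpenError("remote service is not responsive")
        return request_fn(*args, **kwargs)
```

In this sketch the consumer would call something like `breaker.call(place_trade, order)`, where `place_trade` is a hypothetical function wrapping the remote request. When the breaker is open the consumer gets `CircuitOpenError` immediately instead of waiting out a timeout. A real deployment would run `check_service` on a background schedule.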

Circuit breakers can monitor the remote service in several ways. The simplest is a heartbeat check on the remote service (e.g., ping). While this is relatively easy and inexpensive, it only tells the circuit breaker that the remote service is alive; it says nothing about the responsiveness of the actual service request.

To get better information about the responsiveness of the request you can use synthetic transactions. With this technique a fake transaction is periodically sent to the service (e.g., once every 10 seconds). The fake transaction performs all of the functionality required within that service, allowing the circuit breaker to gain an accurate measure of responsiveness. Synthetic transactions can be tricky to implement because all parts of the application or system need to know about the synthetic transaction.

A third type of monitoring is real-time user monitoring, where actual production transactions are monitored for responsiveness. Once a threshold is reached, the breaker moves into what is called a half-open state, where only a certain number of transactions are let through (say 1 out of 10). Once the service responsiveness goes back to normal, the breaker is then closed, allowing all transactions through.
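The real-time user monitoring variant, with its half-open state, can be sketched as follows. This is an illustration under stated assumptions rather than a reference implementation: the class name `SamplingBreaker`, the 2-second slowness threshold, and the limit of 3 consecutive slow responses are all made up for the example; only the "1 out of 10" sampling ratio comes from the text.

```python
class SamplingBreaker:
    """Watches real request latencies and throttles traffic when they degrade."""

    def __init__(self, slow_threshold_s=2.0, slow_limit=3, sample_every=10):
        self.slow_threshold_s = slow_threshold_s  # assumed "too slow" cutoff
        self.slow_limit = slow_limit              # consecutive slow calls tolerated
        self.sample_every = sample_every          # let 1 of every N through
        self.state = "closed"
        self._slow_count = 0
        self._seen = 0

    def allow_request(self):
        """Return True if the next production request may go through."""
        if self.state == "closed":
            return True
        # Half-open: pass the 1st, 11th, 21st, ... request (for N = 10).
        self._seen += 1
        return (self._seen - 1) % self.sample_every == 0

    def record(self, elapsed_s):
        """Feed back the measured latency of a request that was let through."""
        if elapsed_s > self.slow_threshold_s:
            self._slow_count += 1
            if self.state == "closed" and self._slow_count >= self.slow_limit:
                self.state = "half-open"
                self._seen = 0
        else:
            # A normal-speed response: treat the service as recovered.
            self._slow_count = 0
            if self.state == "half-open":
                self.state = "closed"
```

Closing the breaker after a single fast response keeps the sketch short; a production breaker would more likely require several healthy responses before letting all traffic through again.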

There are several open source implementations of the circuit breaker pattern, including Hystrix from Netflix and a plethora of GitHub implementations. The Akka framework includes a circuit breaker implementation as part of the framework implemented through the Akka CircuitBreaker class.

You can get more information about the circuit breaker pattern through the following resources:

- Michael Nygard’s excellent book Release It!

- Martin Fowler’s circuit breaker blog post

- Microsoft MSDN library