video streaming protocols

Sources:

- Streaming protocols and latency

- HLS vs RTMP vs WebRTC

- The ultra-low latency video streaming roadmap: from WebRTC to CMAF

- Streaming protocols comparison

Muxing

Muxing a video means combining multiple audio and video streams into a single file or stream. This is often done for the purpose of distributing or storing audio and video content, as it allows the audio and video to be packaged together in a single file or stream. Muxing can also be used to add additional audio or video streams to a file, such as subtitles or alternate audio tracks.

One common use case for muxing is when creating a video file that will be played on a device or platform that requires a specific container format. For example, a video file might be created by muxing an H.264 video stream and an AAC audio stream into an MP4 container. This allows the video file to be played on a wide range of devices and platforms that support the MP4 format.
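
As a rough sketch of the H.264 + AAC into MP4 example above, the snippet below only builds an `ffmpeg` command line; it does not run it. The file names and the helper name `ffmpeg_mux_args` are hypothetical, and it assumes `ffmpeg` is installed when the command is eventually executed.

```python
def ffmpeg_mux_args(video_path, audio_path, output_path):
    """Build an ffmpeg command that muxes one video and one audio stream."""
    return [
        "ffmpeg",
        "-i", video_path,   # input 0: the H.264 video stream
        "-i", audio_path,   # input 1: the AAC audio stream
        "-map", "0:v:0",    # keep the first video stream of input 0
        "-map", "1:a:0",    # keep the first audio stream of input 1
        "-c", "copy",       # copy the encoded data as-is (no re-encoding)
        output_path,        # the container is inferred from the extension
    ]


if __name__ == "__main__":
    # Hypothetical file names, printed rather than executed.
    print(" ".join(ffmpeg_mux_args("video.h264", "audio.aac", "movie.mp4")))
```

The resulting list can be passed to `subprocess.run(args, check=True)` on a machine where `ffmpeg` is available.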

Muxing can also be used to create files that are optimized for specific use cases, such as streaming over the internet or playing on a particular device. By muxing audio and video streams together in a specific way, it is possible to create files that are optimized for a particular purpose and that will play back smoothly and efficiently on the intended device or platform.

Demuxing

Demuxing a video means separating the audio and video streams that are multiplexed together in a single file or stream. A multiplexed audio and video stream is one where the audio and video data are combined into a single file or stream, and demuxing is the process of separating them back into their original, separate streams. This can be done using specialized software or tools, and it is often necessary in order to edit or process the audio or video streams individually.
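
To make the mux/demux round trip concrete, here is a toy model, not a real container format: packets carry a presentation timestamp and a stream name, muxing interleaves them by timestamp, and demuxing splits them back per stream. The `Packet` type and the function names are assumptions made for this illustration.

```python
import heapq
from collections import defaultdict
from typing import NamedTuple


class Packet(NamedTuple):
    pts: float      # presentation timestamp, in seconds
    stream: str     # e.g. "video" or "audio"
    payload: bytes  # encoded data, left untouched


def mux(*streams):
    """Interleave packet lists (each already sorted by pts) into one sequence."""
    return list(heapq.merge(*streams, key=lambda p: p.pts))


def demux(packets):
    """Split an interleaved sequence back into one packet list per stream."""
    by_stream = defaultdict(list)
    for packet in packets:
        by_stream[packet.stream].append(packet)
    return dict(by_stream)


if __name__ == "__main__":
    video = [Packet(0.0, "video", b"v0"), Packet(0.04, "video", b"v1")]
    audio = [Packet(0.0, "audio", b"a0"), Packet(0.021, "audio", b"a1")]
    muxed = mux(video, audio)
    print([p.stream for p in muxed])
    print(demux(muxed) == {"video": video, "audio": audio})
```

Real containers add headers, indexes, and timing metadata on top of this interleaving, which the sketch deliberately leaves out.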

Transmuxing

Transmuxing is the process of converting a multiplexed audio and video stream from one container format to another without re-encoding the audio and video data. In other words, transmuxing involves separating the audio and video streams from the original container, and then multiplexing them back together into a new container using the same audio and video codecs.

Transmuxing is often used to prepare video content for playback on a particular device or platform that requires a specific container format. For example, a video file that is stored in an AVI container might be transmuxed into an MP4 container in order to be played on a device that only supports MP4 files.

One advantage of transmuxing is that it can be done very quickly, since it does not involve re-encoding the audio and video data. This can be especially useful when working with large video files, as re-encoding can be a time-consuming process. However, transmuxing is only possible if the audio and video codecs used in the original file are supported by the target container. If the codecs are not supported, it may be necessary to re-encode the audio and video streams in order to create a file that can be played on the intended device or platform.
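
The decision described above (transmux when the target container supports the codecs, otherwise re-encode) can be sketched as follows. The `CONTAINER_CODECS` table is a deliberately incomplete assumption for illustration, and the helper names are hypothetical. The `-c copy` option is ffmpeg's stream copy mode, which rewrites the container without re-encoding.

```python
# Illustrative and incomplete: a small sample of codecs each container accepts.
CONTAINER_CODECS = {
    "mp4": {"h264", "hevc", "aac", "mp3"},
    "webm": {"vp8", "vp9", "av1", "vorbis", "opus"},
}


def remux_plan(stream_codecs, target_container):
    """Return ("transmux", []) or ("transcode", [codecs that must be re-encoded])."""
    supported = CONTAINER_CODECS[target_container]
    unsupported = [codec for codec in stream_codecs if codec not in supported]
    if unsupported:
        return ("transcode", unsupported)
    return ("transmux", [])


def ffmpeg_transmux_args(source_path, target_path):
    """Build an ffmpeg command that changes the container but copies the streams."""
    return ["ffmpeg", "-i", source_path, "-c", "copy", target_path]


if __name__ == "__main__":
    print(remux_plan(["h264", "aac"], "mp4"))
    print(remux_plan(["h264", "aac"], "webm"))
    print(ffmpeg_transmux_args("input.avi", "output.mp4"))  # hypothetical paths
```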

Transmuxing vs transcoding

The primary function of transmuxing is to change the container or delivery format of video and audio while preserving the original encoded content. Although transmuxing is similar to transcoding, it is generally considered more lightweight and requires less computing power, because the streams are never decoded to raw video or audio and re-encoded.

- https://blog.twitch.tv/en/2017/10/10/live-video-transmuxing-transcoding-f-fmpeg-vs-twitch-transcoder-part-i-489c1c125f28/

- https://blog.twitch.tv/en/2017/10/23/live-video-transmuxing-transcoding-f-fmpeg-vs-twitch-transcoder-part-ii-4973f475f8a3/

RTMP: Real Time Messaging Protocol

RTMP is a TCP-based protocol that maintains persistent connections and allows low-latency communication. To deliver streams smoothly and transmit as much information as possible, it splits streams into fragments whose size is negotiated dynamically between the client and the server. If the size is left unchanged, the defaults apply: 64 bytes for audio data, and 128 bytes for video data and most other data types.
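
The fragmentation idea can be sketched as below. This is a simplification: real RTMP chunks also carry a chunk header, which is omitted here, and the function name is hypothetical. The 128-byte default mirrors the figure quoted above.

```python
def split_into_chunks(payload: bytes, chunk_size: int = 128) -> list[bytes]:
    """Split a message payload into fragments of at most chunk_size bytes."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    return [payload[i:i + chunk_size] for i in range(0, len(payload), chunk_size)]


if __name__ == "__main__":
    message = bytes(300)  # a dummy 300-byte payload
    chunks = split_into_chunks(message)
    print([len(chunk) for chunk in chunks])
    # Reassembly on the receiving side is a plain concatenation.
    print(b"".join(chunks) == message)
```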

RTMP was the most popular live streaming protocol for a while, but it came with a major issue: the Flash video player, an essential piece of the puzzle, was not compatible with mobile devices. When mobile viewing started to eclipse desktop viewing, this became a problem.

As a result, other protocols such as HLS are now used for live streaming delivery.

However, RTMP ingest is still the standard use of RTMP in live streaming. RTMP ingest is valuable for broadcasters because it supports low-latency streaming and it is made possible by low-cost RTMP encoders.

Pros & cons

- 🟢 pros

- multicast support

- low buffering

- wide platform support

- easiest to implement (both server & clients)

- 🔴 cons

- old codecs

- somewhat low security

- must use RTMPS or RTMPE or a variant

- relatively high latency (2 to 5s)

RTSP: Real Time Streaming Protocol

The Real-Time Streaming Protocol (RTSP) was one of the favored video technologies in the streaming world before RTMP, whose Flash-based playback is no longer supported, and before the HTML5-based protocols that currently lead the streaming world.

RTSP is still one of the most preferred protocols for IP cameras. It also remains the standard in many surveillance and closed-circuit television (CCTV) architectures.

Pros & cons

- 🟢 pros

- low-latency and ubiquitous in IP cameras

- 🔴 cons

- not optimized for quality of experience

- not scalable

WebRTC

WebRTC (Web Real-Time Communication) is a technology that allows web browsers to stream audio or video media, and to exchange arbitrary data between browsers, mobile platforms, and IoT devices, without requiring an intermediary.

A direct path is not always available, however: peers can sit on different IP networks where firewalls and NATs (Network Address Translators) enforce policies that block real-time communication traffic.

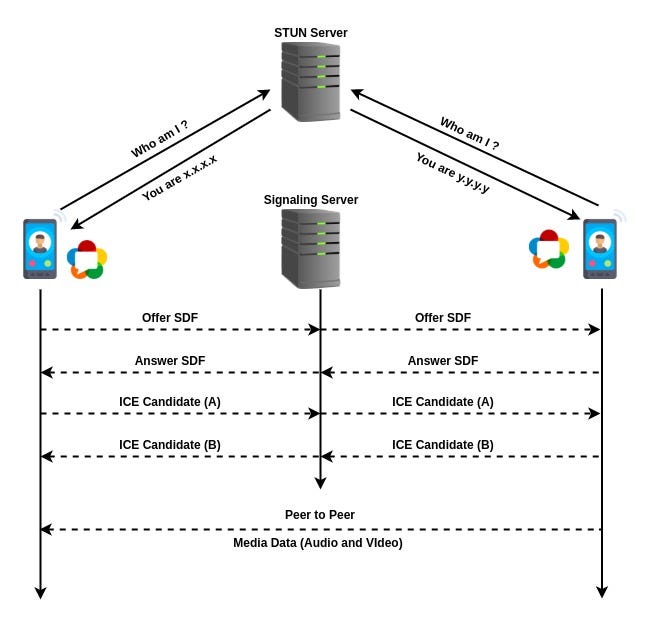

To handle these situations, WebRTC relies on the ICE (Interactive Connectivity Establishment) protocol, which defines a systematic way of finding possible communication paths between a peer and the video gateway (WebRTC).

ICE generates media traversal candidates for WebRTC applications, that is, addresses through which media can be sent and received across Network Address Translation (NAT) devices with the help of STUN and TURN.
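
ICE also ranks the candidates it gathers. The sketch below uses the priority formula and the recommended type preferences from the ICE specification (RFC 8445); the function name and the default arguments are choices made for this illustration. It shows why a direct (host) candidate is tried before a server-reflexive (STUN) one, and a relayed (TURN) one last.

```python
# Recommended type preferences: direct paths rank above relayed ones.
TYPE_PREFERENCE = {"host": 126, "prflx": 110, "srflx": 100, "relay": 0}


def candidate_priority(candidate_type, local_preference=65535, component_id=1):
    """priority = 2^24 * type pref + 2^8 * local pref + (256 - component ID)."""
    return (
        (TYPE_PREFERENCE[candidate_type] << 24)
        + (local_preference << 8)
        + (256 - component_id)
    )


if __name__ == "__main__":
    ranked = sorted(["relay", "host", "srflx"], key=candidate_priority, reverse=True)
    for candidate_type in ranked:
        print(candidate_type, candidate_priority(candidate_type))
```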

STUN

STUN (Session Traversal Utilities for NAT) is a protocol that complements ICE in traversing NATs, typically over UDP. It allows applications to discover the presence and types of NATs and firewalls between them and the public Internet. Any device can use it to determine the IP address and port that a NAT has allocated to it.

Typically, a STUN client sends a request to a STUN server, and the server replies with the public IP address and port from which it saw the request arrive. Clients then use this public IP and port information to set up peer-to-peer communication over the Internet.
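
A minimal sketch of that exchange at the byte level, following the message layout of the STUN specification (RFC 8489): it builds a Binding Request and decodes the XOR-MAPPED-ADDRESS attribute that carries the public address in the response. No network traffic is sent, only IPv4 is handled, and the function names are hypothetical.

```python
import ipaddress
import os
import struct

MAGIC_COOKIE = 0x2112A442   # fixed value present in every STUN message
BINDING_REQUEST = 0x0001    # message type of a Binding Request


def build_binding_request(transaction_id=None):
    """Return the 20-byte header of a Binding Request without attributes."""
    tid = os.urandom(12) if transaction_id is None else transaction_id
    if len(tid) != 12:
        raise ValueError("transaction ID must be 12 bytes")
    # type (2 bytes), attribute length (2 bytes, zero here), magic cookie (4 bytes)
    return struct.pack("!HHI", BINDING_REQUEST, 0, MAGIC_COOKIE) + tid


def decode_xor_mapped_address(value):
    """Decode the value of an IPv4 XOR-MAPPED-ADDRESS attribute to (ip, port)."""
    _reserved, family, xport, xaddr = struct.unpack("!BBHI", value[:8])
    if family != 0x01:
        raise ValueError("only IPv4 is handled in this sketch")
    port = xport ^ (MAGIC_COOKIE >> 16)   # port is XORed with the cookie's top 16 bits
    address = ipaddress.IPv4Address(xaddr ^ MAGIC_COOKIE)
    return str(address), port


if __name__ == "__main__":
    print(build_binding_request().hex())
```

In a real client the request would be sent over UDP to a STUN server and the attribute would be extracted from the server's response.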

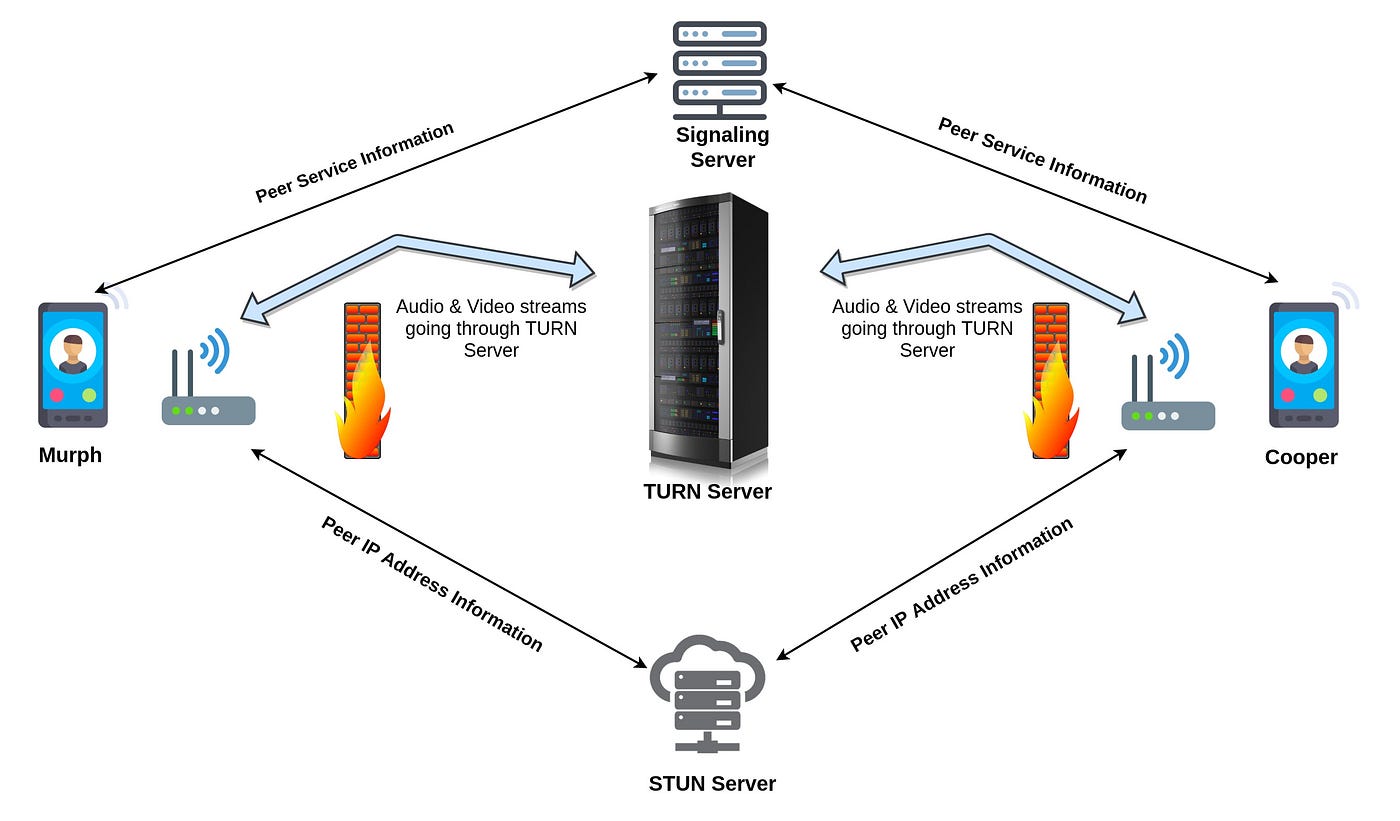

TURN

TURN (Traversal Using Relays around NAT) is a protocol that assists in the traversal of network address translators (NATs) or firewalls for WebRTC applications. A TURN server allows clients to send and receive data through an intermediary server. The TURN protocol is an extension of STUN.

In some cases, for example when the communicating endpoints are behind different types of NAT or when a symmetric NAT is in use, it may be easier to send media through a relay server, called the TURN server.

Typically, a TURN client first sends a message to a TURN server to allocate an IP address and port on that server. Once the allocation has succeeded, the client uses this relayed IP address and port to communicate with peers. Each TURN message from the client carries the destination address of the peer; the server unwraps it and forwards the data to the peer as a UDP packet.

TURN is most useful for web, mobile, and IoT clients on networks masqueraded by symmetric NAT devices. However, running a TURN server is costly, because every relayed connection consumes server resources and bandwidth, and usage grows with the number of client connections.
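
In practice, the STUN and TURN servers are handed to the WebRTC stack as a configuration object. The sketch below builds a dictionary in the shape of the browser's `RTCConfiguration` (`iceServers`, `iceTransportPolicy`); the host names and credentials are placeholders, and the helper name is hypothetical.

```python
import json


def ice_configuration(stun_url, turn_url, username, credential, relay_only=False):
    """Build an RTCConfiguration-shaped dictionary listing STUN and TURN servers."""
    return {
        "iceServers": [
            {"urls": [stun_url]},
            {"urls": [turn_url], "username": username, "credential": credential},
        ],
        # "relay" forces all media through TURN; "all" also allows direct paths.
        "iceTransportPolicy": "relay" if relay_only else "all",
    }


if __name__ == "__main__":
    config = ice_configuration(
        "stun:stun.example.org:3478",   # placeholder servers
        "turn:turn.example.org:3478",
        "user",
        "secret",
    )
    print(json.dumps(config, indent=2))
```

Forcing `relay_only=True` is a simple way to test that the TURN server works, at the price of the relay costs described above.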

Pros & cons

- 🟢 pros

- WebRTC is low latency (~0.5s) and designed for peer-to-peer connections

- secure by design

- 🔴 cons

- ⚠️ dynamic port from 5000 to 65000 (UDP & TCP)

- we may need to deploy a TURN server to mitigate this port issue

- ⇒ performance may be impacted if the live stream goes through an intermediate server

- if we also want to use WebRTC as output

- it might be quite a challenge to synchronize the video live stream with the signals live stream

- overall complex network workflow

- network issue can occur in any service (STUN, TURN, signaling server, peer-to-peer, …)

- ICE processing (i.e. “call setup delay”) may take some time (can be up to ~10s according to some)

- can be mitigated by using Trickle ICE

Sources:

- What is WebRTC and how to setup STUN/TURN server

- How does WebRTC work

- Get started with WebRTC

- STUN, TURN, and ICE NAT Traversal protocols

- ICE always tastes better when it trickles

- Why WebRTC is a good option to implement in comparison with RTMP

- Du peer-to-peer à traver un NAT, un proxy ou un firewall grâce à WebRTC, STUN et TURN

HLS

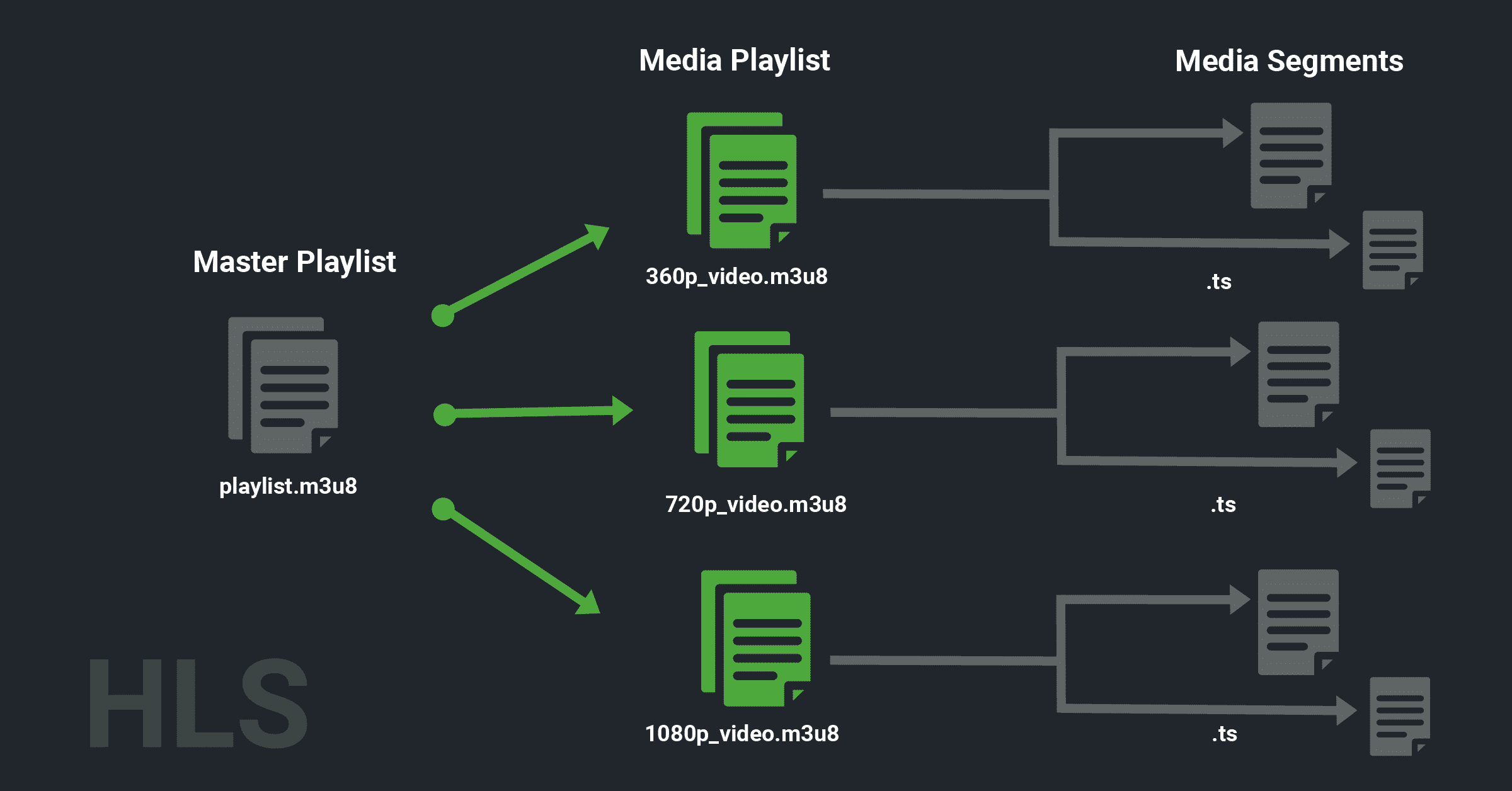

HLS uses a mechanism known as Adaptive Bitrate Streaming to deliver high-performance videos to all devices. It sounds fancy, but the principle here is very basic.

Imagine a 1080p video is uploaded to an online server. A viewer then wants to watch it on a phone whose screen resolution is lower than 1080p. Flash Player would play the original video without modifying it, causing it to buffer.

A similar problem would occur when a video with a smaller resolution is played on a high-resolution screen. In this case, the video will pixelate and result in poor viewing quality and experience.

Adaptive Bitrate Streaming is an elegant solution to all these problems. It splits the original video into 10-second segments and creates several versions of each piece. Then it plays the video according to the viewer’s preferences, internet speed, and the device used.

For instance, it will play a low-quality video to a viewer with a smaller screen and slow internet but will play the same video in high-resolution to a viewer with a large screen and fast internet.

Furthermore, Adaptive Bitrate Streaming enables automatic quality change in real-time as the viewer is watching the video. If internet speed suddenly drops, it will automatically decrease the quality of the video. The goal here is to provide a seamless and uninterrupted viewing experience to the viewer.
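
The switching logic can be sketched as a simple rule: pick the highest-bitrate version that fits within the measured throughput, with some headroom. This is an assumption-laden simplification, since real players also consider buffer level and screen size; the variant names, the bitrates, and the 0.8 safety factor are illustrative.

```python
def pick_variant(variants, measured_bps, safety_factor=0.8):
    """Pick the best variant whose bandwidth fits the measured throughput."""
    budget = measured_bps * safety_factor   # keep headroom for fluctuations
    affordable = [v for v in variants if v["bandwidth"] <= budget]
    if affordable:
        return max(affordable, key=lambda v: v["bandwidth"])
    # Nothing fits: fall back to the lowest bitrate rather than stalling.
    return min(variants, key=lambda v: v["bandwidth"])


if __name__ == "__main__":
    variants = [
        {"name": "low", "bandwidth": 1_280_000},
        {"name": "mid", "bandwidth": 2_560_000},
        {"name": "hi", "bandwidth": 7_680_000},
    ]
    for throughput in (10_000_000, 4_000_000, 500_000):
        print(throughput, pick_variant(variants, throughput)["name"])
```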

HLS is extremely helpful for users who often face fluctuating internet speeds and desire a smooth video quality. Moreover, HLS also allows for closed captions embedded in the video.

Example of master playlist content

#EXTM3U

#EXT-X-STREAM-INF:BANDWIDTH=1280000,AVERAGE-BANDWIDTH=1000000

http://example.com/low.m3u8

#EXT-X-STREAM-INF:BANDWIDTH=2560000,AVERAGE-BANDWIDTH=2000000

http://example.com/mid.m3u8

#EXT-X-STREAM-INF:BANDWIDTH=7680000,AVERAGE-BANDWIDTH=6000000

http://example.com/hi.m3u8

#EXT-X-STREAM-INF:BANDWIDTH=65000,CODECS="mp4a.40.5"

http://example.com/audio-only.m3u8

Example of media playlist content

#EXTM3U

#EXT-X-TARGETDURATION:10

#EXT-X-VERSION:3

#EXTINF:9.009,

http://media.example.com/first.ts

#EXTINF:9.009,

http://media.example.com/second.ts

#EXTINF:3.003,

http://media.example.com/third.ts

#EXT-X-ENDLIST
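
A media playlist like the one above is plain text and can be read with a few lines of code. The parser below is a minimal sketch that only understands the tags used in this example (`#EXT-X-TARGETDURATION`, `#EXTINF`, `#EXT-X-ENDLIST`); the function name is hypothetical, and a production player would rely on a complete HLS parser.

```python
def parse_media_playlist(text):
    """Extract target duration, (uri, duration) pairs and the end marker."""
    lines = [line.strip() for line in text.splitlines() if line.strip()]
    if not lines or lines[0] != "#EXTM3U":
        raise ValueError("not an M3U8 playlist")
    target_duration, segments, pending, ended = None, [], None, False
    for line in lines[1:]:
        if line.startswith("#EXT-X-TARGETDURATION:"):
            target_duration = int(line.split(":", 1)[1])
        elif line.startswith("#EXTINF:"):
            # "#EXTINF:<duration>,<optional title>" describes the next URI line
            pending = float(line.split(":", 1)[1].split(",", 1)[0])
        elif line == "#EXT-X-ENDLIST":
            ended = True   # no more segments will be appended
        elif not line.startswith("#"):
            if pending is None:
                raise ValueError("segment URI without #EXTINF: " + line)
            segments.append((line, pending))
            pending = None
    return {"target_duration": target_duration, "segments": segments, "ended": ended}


if __name__ == "__main__":
    sample = "#EXTM3U\n#EXT-X-TARGETDURATION:10\n#EXTINF:9.009,\nfirst.ts\n#EXT-X-ENDLIST\n"
    print(parse_media_playlist(sample))
```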

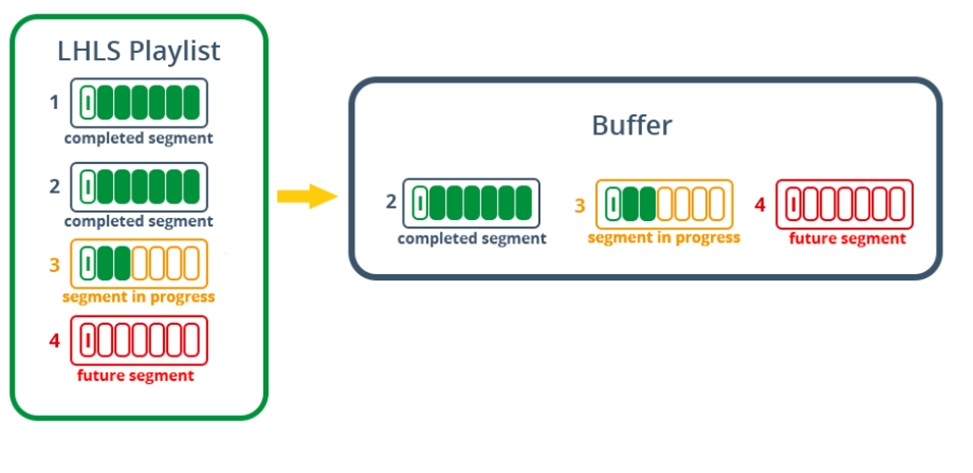

LL-HLS

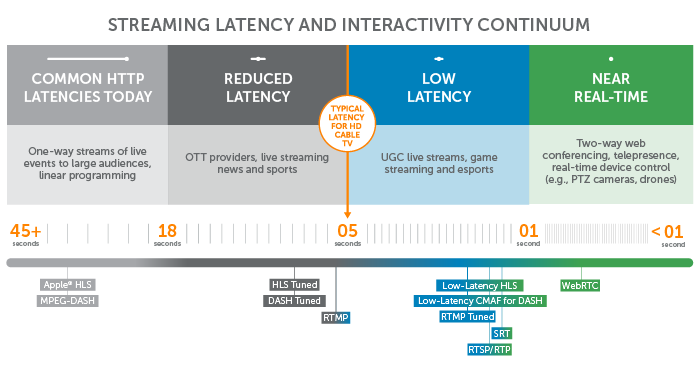

As latency has gained importance over the last few years, HLS was extended to LL-HLS.

Low-Latency HLS aims to provide the same scalability as HLS while achieving a latency of 2-8 seconds (compared to 24-30 seconds with traditional solutions). In essence, the changes to the protocol are rather simple.

- A segment is divided into “parts” which makes them something like “mini segments”. Each part is listed separately in the playlist. In contrast with segments, listed parts can disappear while the segments containing the same data remain available for a longer time.

- A playlist can contain “Preload Hints” to allow a player to anticipate what data must be downloaded, which reduces overhead.

- Servers get the option to provide unique identifiers for every version of a playlist, easing cacheability of the playlist and avoiding stale information in caching servers.
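
One way the last point shows up in practice is through the `_HLS_msn` and `_HLS_part` query parameters: the player asks for the playlist version that contains a given media sequence number and part, so each version gets its own cacheable URL. The sketch below only builds such a URL; the helper name and the example URL are hypothetical.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def blocking_reload_url(playlist_url, msn, part=None):
    """Return the playlist URL for the version containing segment msn (and part)."""
    pieces = urlsplit(playlist_url)
    # Keep unrelated query parameters, drop any previous _HLS_ directives.
    query = [
        (key, value)
        for key, value in parse_qsl(pieces.query, keep_blank_values=True)
        if not key.startswith("_HLS_")
    ]
    query.append(("_HLS_msn", str(msn)))
    if part is not None:
        query.append(("_HLS_part", str(part)))
    return urlunsplit(pieces._replace(query=urlencode(query)))


if __name__ == "__main__":
    print(blocking_reload_url("https://example.com/live/video.m3u8", 273, 2))
```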

Media playlist content example