Service Architecture at SoundCloud

Part 1: Backends for Frontends

src: Service Architecture at SoundCloud — Part 1: Backends for Frontends - 2021-07-29

This article is part of a series of posts aiming to cast some light onto how service architecture has evolved at SoundCloud over the past few years, and how we’re attempting to solve some of the most difficult challenges we encountered along the way.

Backends for Frontends

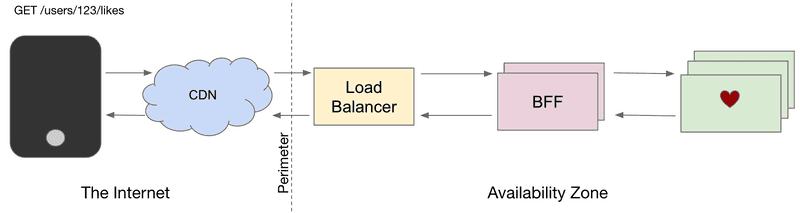

SoundCloud pioneered the Backends for Frontends (BFF) architectural pattern back in 2013 while moving away from an exhausted model of an eat-your-own-dog-food approach. The exhausted model involved using a single API (the Public API) both for official applications and third-party integrations. But the need to scale operationally and organizationally led to a migration from a monolith-based architecture to a microservices architecture. The proliferation of new microservices, paired with the introduction of a private/internal API for the monolith (effectively turning the monolith into yet another microservice), opened the door for new and innovative dedicated APIs to power our frontends. Thus, BFF was born, and it was really exciting, as it enabled autonomy for teams — along with many other advantages that will be discussed shortly.

In a nutshell, BFF is an architectural pattern that involves creating multiple, dedicated API gateways for each device or interface type, with the goal of optimizing each API for its particular use case.

Plenty has been written about BFF, the theoretical advantages that it provides, and how it compares to other approaches and technologies like centralized API gateways and GraphQL. However, little can be found on real-life experiences, risks, and tradeoffs encountered along the way, so we decided to write this series to shed some light on these topics.

BFFs at SoundCloud in 2021

SoundCloud operates dozens of BFFs, each powering a dedicated API. BFFs provide API gateway responsibilities, including rate limiting, authentication, header sanitization, and cache control. All external traffic entering our data centers is processed by one of our BFFs. Combined, they handle hundreds of millions of requests per hour.
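As a rough illustration of two of these gateway responsibilities, here is a minimal Python sketch of header sanitization and rate limiting at the edge. The header names, the fixed-window limiter, and `handle_at_edge` are assumptions made for the example; the article does not describe how SoundCloud's internal edge library implements them.

```python
from dataclasses import dataclass, field

# Hypothetical header names; the real list is not given in the article.
INTERNAL_HEADERS = {"x-internal-user-id", "x-internal-trace"}


def sanitize_headers(headers: dict[str, str]) -> dict[str, str]:
    """Drop headers that only internal services should be allowed to set."""
    return {k: v for k, v in headers.items() if k.lower() not in INTERNAL_HEADERS}


@dataclass
class FixedWindowRateLimiter:
    """Allow at most `limit` requests per client within the current window."""

    limit: int
    counts: dict[str, int] = field(default_factory=dict)

    def allow(self, client_id: str) -> bool:
        seen = self.counts.get(client_id, 0)
        if seen >= self.limit:
            return False
        self.counts[client_id] = seen + 1
        return True

    def reset_window(self) -> None:
        """Called by a timer at the start of each window (not shown here)."""
        self.counts.clear()


def handle_at_edge(client_id: str, headers: dict[str, str],
                   limiter: FixedWindowRateLimiter) -> tuple[int, dict[str, str]]:
    """Return an HTTP-like status and the headers to forward upstream."""
    if not limiter.allow(client_id):
        return 429, {}
    return 200, sanitize_headers(headers)
```

Keeping these checks in a shared library rather than in each BFF is what makes it possible to roll out a change to all of them at once.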

BFFs make use of an internal library providing edge capabilities, as well as extension points for custom behavior. New library releases are semi-automatically rolled out to all BFFs within hours.

Some examples of BFFs include our Mobile API (powering Android and iOS clients), our Web API (powering our web frontends and widget), and our Public and Partner APIs.

BFFs are maintained using an inner source model, in which individual teams contribute changes, and a Core team reviews and approves changes based on principles discussed in a collective. The Collective, organized by a Platform Lead, meets regularly to discuss issues and share knowledge.

The Good

One of the key advantages BFFs provide is autonomy. By having separate APIs per client type, we can optimize our APIs for whatever is convenient for each client type without the need for synchronization points and difficult compromises. For example, our mobile clients tend to prefer larger responses with a high number of embedded entities as a way to minimize the number of requests and to leverage internal caches, while our web frontend prefers finer-grained responses and dynamic augmentation of representations.

Another advantage of BFFs is resilience. A bad deploy might bring down an entire BFF in an availability zone, but it shouldn’t bring down the entire platform. This is in addition to many other resilience mechanisms in place.

Additionally, high autonomy and lower risk lead to a high pace of development. Our main BFFs are deployed multiple times per day and receive contributions from all over the engineering organization.

Finally, BFFs enable the implementation of sometimes ugly but necessary workarounds and mitigation strategies (a client bug fix affecting specific versions) without affecting the overall complexity of the platform.

The Bad

BFFs provide many advantages, but they can also be a source of problems if they’re not part of a broader service architecture that’s able to keep complexity and duplication at bay.

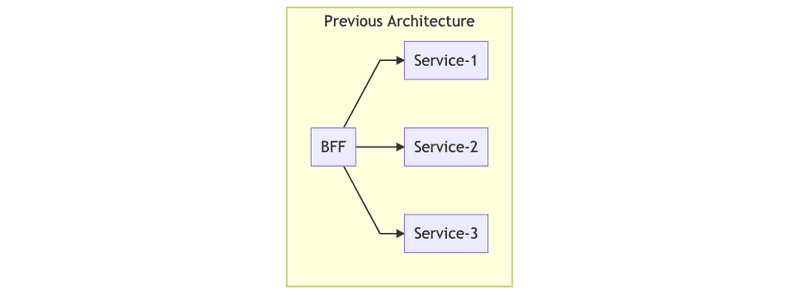

In service architectures with very small microservices that do little more than CRUD, and with no intermediate layers between these microservices and BFFs, feature integration (with all the associated business logic) tends to end up in the BFFs themselves. Although this problem also exists with other models, like centralized API gateways, it’s particularly problematic in architectures with multiple BFFs, since this logic can end up duplicated multiple times, with diverging and inconsistent implementations that drift apart over time.

This issue becomes critical for authorization rules that can only be applied at integration time (for example, because the necessary pieces of information required to make a decision are spread across multiple microservices). This model obviously doesn’t scale with the addition of more and more BFFs.

At SoundCloud, this problem manifested as the Track and Playlist core entities grew and were decomposed into multiple microservices serving parts of the final representations assembled in each of the BFFs. Suddenly, the authorization logic needed to be moved to the point of integration, which, at the time, was the BFF. This was not too concerning at first, with just a handful of BFFs and very simple authorization logic, but as the logic grew in complexity and the number of BFFs increased, it caused many problems. This will be the focus of the next posts in this series.

The Ugly

Effective operation of multiple BFFs requires a set of platform-wide capabilities; in their absence, BFFs tend to proliferate unnecessarily. For example, application entitlements (in addition to user entitlements) are needed to restrict certain applications and third-party integrations to specific endpoints. Without them, it’s tempting to spawn an entire new BFF for narrow use cases with specific access control requirements. There needs to be a strategy to decide how many BFFs are too many and when to create one versus when to reuse an existing one. Even though BFFs are designed to provide autonomy, there’s a tradeoff between autonomy and added maintenance and operational overhead that needs to be carefully managed.
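To make the idea of application entitlements concrete, the following Python sketch checks whether a calling application may use an endpoint. The entitlement names, application IDs, and the `app_may_call` function are all hypothetical; the article does not describe SoundCloud's actual entitlement model.

```python
# Hypothetical entitlement data; the article does not describe the real model.
APP_ENTITLEMENTS: dict[str, set[str]] = {
    "official-mobile": {"tracks:read", "tracks:write"},
    "partner-app": {"tracks:read"},
}

# Entitlement that each endpoint requires, keyed by (method, path).
ENDPOINT_REQUIREMENTS: dict[tuple[str, str], str] = {
    ("GET", "/tracks"): "tracks:read",
    ("POST", "/tracks"): "tracks:write",
}


def app_may_call(app_id: str, method: str, path: str) -> bool:
    """Return True if the calling application is entitled to use the endpoint."""
    required = ENDPOINT_REQUIREMENTS.get((method, path))
    if required is None:
        return False  # unknown endpoints are denied by default
    return required in APP_ENTITLEMENTS.get(app_id, set())
```

With a check like this available in every BFF, a narrow integration can be served by an existing BFF with a restricted entitlement set instead of a new BFF of its own.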

We’ve also seen a tendency to push complex client-side logic to the BFF. This stems from the initial idea that a BFF is an extension of the client, and that therefore it should be treated as “the backend side of the client.” This has worked well in some cases, but in others it has led to problems. For example, pushing pagination to the server (recursively paginating to return an entire collection in one single request) — even though faster for basic use cases — can lead to timeouts, restrictive limits for collection sizes, and fan-out storms that may bring the entire system down.
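One way to limit the risk described above is to cap how many pages a single request may follow on the server. The sketch below is an assumption about how such a guard could look, not a description of SoundCloud's implementation; `fetch_collection` and the cursor-based `fetch_page` callback are invented for the example.

```python
from typing import Callable, Optional

# A page fetcher returns (items, next_cursor); next_cursor is None on the last page.
PageFetcher = Callable[[Optional[str]], tuple[list[str], Optional[str]]]


def fetch_collection(fetch_page: PageFetcher,
                     max_pages: int) -> tuple[list[str], Optional[str]]:
    """Follow cursors on the server, but stop after `max_pages` upstream calls.

    Returns the items collected so far and the cursor the client can use to
    continue, so that one request never fans out without bound.
    """
    if max_pages < 1:
        raise ValueError("max_pages must be at least 1")
    items: list[str] = []
    cursor: Optional[str] = None
    for _ in range(max_pages):
        page, cursor = fetch_page(cursor)
        items.extend(page)
        if cursor is None:  # reached the end of the collection
            break
    return items, cursor
```

The client still gets several pages per request in the common case, while very large collections degrade into ordinary client-driven pagination instead of timing out.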

Although BFFs enable some form of autonomy, it’s also important to recognize that BFFs are at the intersection of two worlds, and the idea of full autonomy for client developers is just an illusion. Extensive collaboration between frontend and backend engineers is required to ensure optimal API designs that are convenient for client developers to use, in addition to being optimized for distributed environments and their intricacies.

Summary

Backends for Frontends is an architectural pattern that can lead to a high degree of autonomy and pace of development. Like all engineering decisions, it comes with a set of tradeoffs that must be well understood and managed. In particular, a good service architecture is critical for scalability, security, and maintainability, and there are limits to how much autonomy can be achieved.

In future posts, we’ll dive into some of the unintended consequences of using the BFF pattern and discuss how our service architecture has evolved to address them. Stay tuned!

Part 2: Value-Added Services

src: Service Architecture at SoundCloud — Part 2: Value-Added Services - 2021-08-20

In the first installment, we covered the use of the BFF pattern within SoundCloud, detailing its pros and — more significantly — its cons. While the BFF architecture comes with many benefits, such as optimizing backends suited for different clients and a higher level of resilience than a shared single backend, its implementation at SoundCloud became problematic over time. Unnecessary complexity and duplicate code developed. Even worse, we had business and divergent authorization logic living in each of the BFFs, which is a dangerous pattern, as the maintenance and synchronicity of this code is of paramount importance. It became clear that we needed a different approach: Enter Value-Added Services (VAS).

Value-Added Services

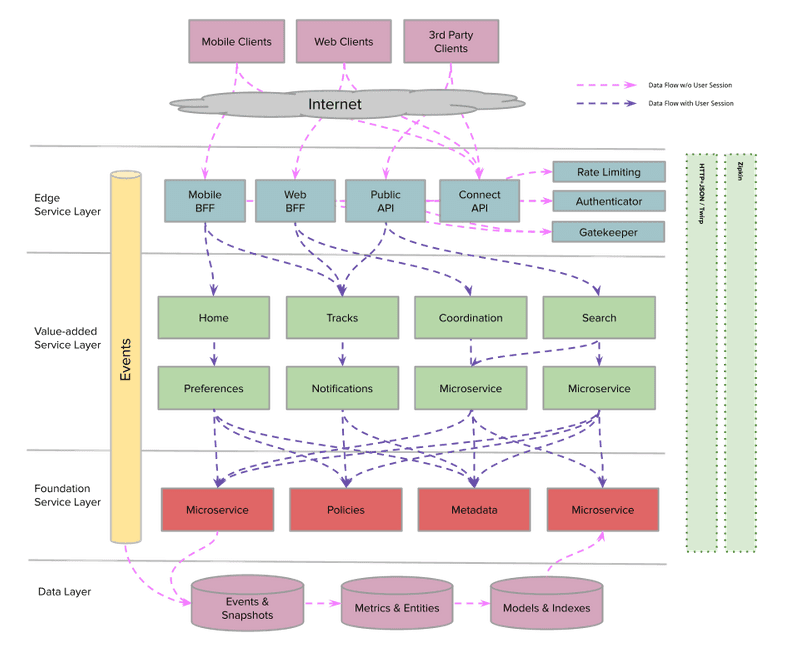

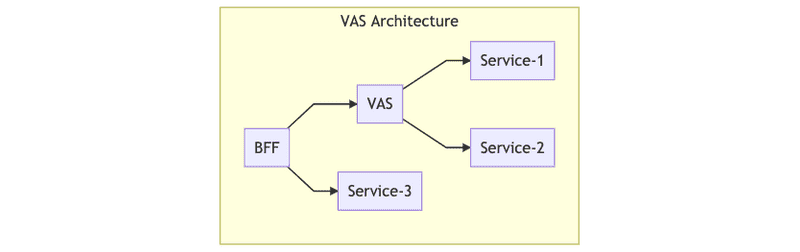

First, let’s cover the different service layers at SoundCloud that make up this architecture.

- Edge: This layer provides API gateway capabilities and is where our BFFs live. The BFFs are published and maintained dedicated APIs that are tailored to specific client needs.

- Value Added: Services in this layer consume data from other services and process them in some way to build rich experiences for users.

- Foundation: Services in this layer are low-level and provide the building blocks around a domain.

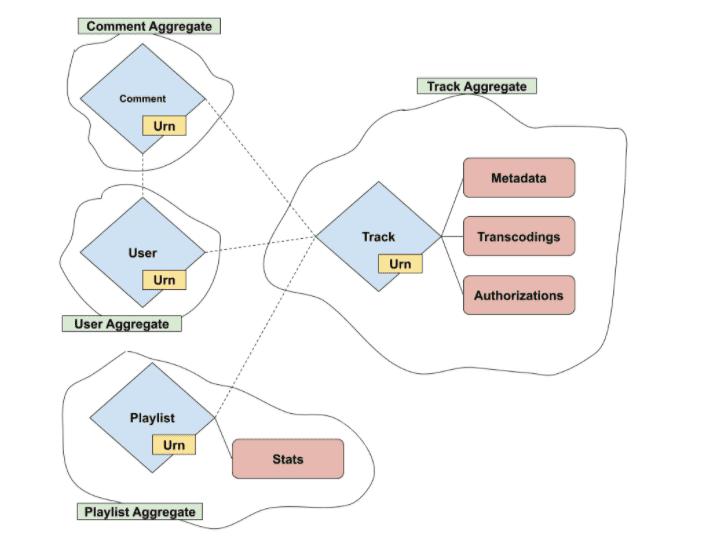

It’s also important to understand the building blocks that come together in Value-Added Services. These are all well-known domain-driven design concepts, which you can read more about in this article.

- Domain: A user or business concern that can be used to draw boundaries/scope around service integrations.

- Entity: An entity is an object that has an independent identifier and a lifecycle.

- Value Objects: Value objects contain metadata related to a given entity; they’re also tied to the lifecycle of the given entity.

- Aggregate: An aggregate is a collection of one or more related entities. An aggregate has a root entity called the aggregate root. Aggregates can also contain references to other entities, but not the referenced entity metadata. It’s then up to the consuming services to call other services to synthesize the entity references.
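The building blocks above can be sketched with a few Python dataclasses. The field names (`preset`, `url`, `owner_user_id`, and so on) are illustrative assumptions, not SoundCloud's actual schema.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Transcoding:
    """Value object: no identity of its own; it lives and dies with its track."""

    preset: str
    url: str


@dataclass(frozen=True)
class Track:
    """Entity and aggregate root: identified by `track_id`."""

    track_id: str
    title: str
    transcodings: tuple[Transcoding, ...]
    # Reference to another entity: only the ID, never the user's metadata.
    owner_user_id: str
```

Because the aggregate carries only `owner_user_id`, a consumer that needs the owner's name or avatar has to resolve that reference through whichever service owns users.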

Value-Added Services are business services responsible for returning an entity and its associated value objects (in other words, an aggregate) to the caller. It’s important to note that a VAS is not responsible for synthesizing metadata for any associated entities. This allows for a nice separation of concerns, along with a centralized point where metadata and authorization rules for a given entity can be defined. A VAS can then orchestrate calls to Foundation services to synthesize and authorize aggregates, which it returns to the BFF.

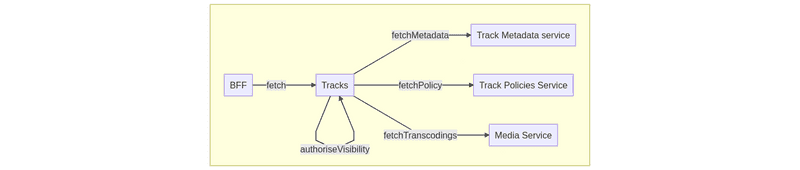

Let’s apply these concepts to real-life examples at SoundCloud. An example entity is a track, which has associated value objects like metadata, transcodings, and authorization policies to determine visibility. A track is also connected to an owning user, but since this is another entity, it only contains the user ID as a reference. If a consuming service has a track ID it wants to resolve, it’ll then call the Tracks VAS, which takes care of ensuring that the track is visible and then returns the corresponding track aggregate.

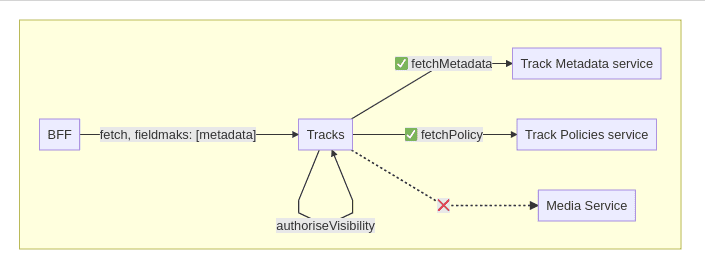

Previously, if an end user wanted to fetch a track, the request would be sent to the BFF. It would then be up to the BFF to determine whether the session user was authorized to view this track and, if so, to synthesize the external track representation to return back to the user. This would involve calls to various Foundation services that are individually responsible for returning both authorization information and track metadata.

However, once the Tracks VAS was introduced, this pattern changed. All the duplicate logic surrounding calls to Foundation services in the BFFs was moved to the Tracks service, which now took care of synthesizing track aggregates for the BFFs, in addition to handling context-specific track visibility and authorization. The BFF was then responsible for mapping those internal track aggregates to external representations for the clients to consume.
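The division of labor described here can be sketched as follows. This is a simplified illustration under stated assumptions: the in-memory dictionaries stand in for Foundation services that would really be network calls, the "private tracks are visible only to their owner" rule is invented for the example, and the function names are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional

# Stand-ins for Foundation services; the real ones are separate network services.
TRACK_METADATA = {"t1": {"title": "Demo", "owner_user_id": "u1"}}
TRACK_POLICIES = {"t1": {"private": True}}


@dataclass(frozen=True)
class TrackAggregate:
    track_id: str
    title: str
    owner_user_id: str


def tracks_vas_get(track_id: str, session_user_id: Optional[str]) -> Optional[TrackAggregate]:
    """VAS: assemble the aggregate and apply visibility rules in one place."""
    meta = TRACK_METADATA.get(track_id)
    if meta is None:
        return None
    policy = TRACK_POLICIES.get(track_id, {})
    # Assumed rule for this sketch: private tracks are visible only to their owner.
    if policy.get("private") and session_user_id != meta["owner_user_id"]:
        return None
    return TrackAggregate(track_id, meta["title"], meta["owner_user_id"])


def mobile_bff_track(track_id: str, session_user_id: Optional[str]) -> tuple[int, dict]:
    """BFF: only map the internal aggregate to an external representation."""
    track = tracks_vas_get(track_id, session_user_id)
    if track is None:
        return 404, {}
    return 200, {"id": track.track_id, "title": track.title,
                 "user": {"id": track.owner_user_id}}
```

A second BFF would call the same `tracks_vas_get` and differ only in the mapping step, which is where the duplication disappears.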

Of course, nuances in how the BFFs behave in fetching tracks remain, but all shared code now lives in a single codebase. Integrations requiring track aggregates are now as simple as querying endpoints exposed by the Tracks VAS, removing the need to reorchestrate calls to Foundation services, and maintaining a guarantee that authorization is properly taken care of.

Evolution of VAS at SoundCloud

Now that we’ve explained the basis of VAS and how we integrated it into SoundCloud for tracks, we’d like to share how we adapted the same architectural pattern for the case of playlists. We’ll also outline the challenges we encountered during the process of evolving our architecture toward a VAS landscape.

As we already discussed, in 2019, we started the development of a Tracks service using the concept of a VAS. That was the first implementation of such a concept in the organization, and it helped us validate a model to apply to the rest of our entities. In 2020, we began a major refactoring of our Public API. The codebase of the Public API was divided between the Public API component of the Mothership monolith and the Public API BFF, which is a facade of the Public API. The refactor involved migrating all endpoints from Mothership and rewriting them in the Public API BFF.

Rewriting all track-related endpoints (all the endpoints that were returning the representation of tracks) was an easy task, as we already had a Tracks VAS up and running, so we just needed to connect the Public API with the Tracks service.

However, we also needed to rewrite all the playlist-related endpoints, and we didn’t have a corresponding VAS. So we had to decide whether to duplicate the existing authorization and fetching logic from other BFFs in the Public API, or to create a new Playlists VAS as the central service for playlist-fetching logic and make the Public API BFF depend on it. The latter solution was, for us, the more attractive one, as it would require refactoring and cleaning up the rest of the BFFs so that they also use the Playlists VAS for the many different playlist-related endpoints.

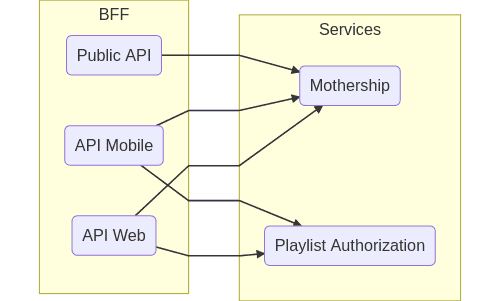

The following graph illustrates the process of migration:

As you can see, the main SoundCloud APIs were calling directly to the Mothership (the original SoundCloud app which is the main interface to our databases) and to Playlist Authorization (a system that authorizes users according to business rules of tracks in a playlist). This summarizes the two main problems of this approach: duplication of logic in the BFFs, and fragile authorization.

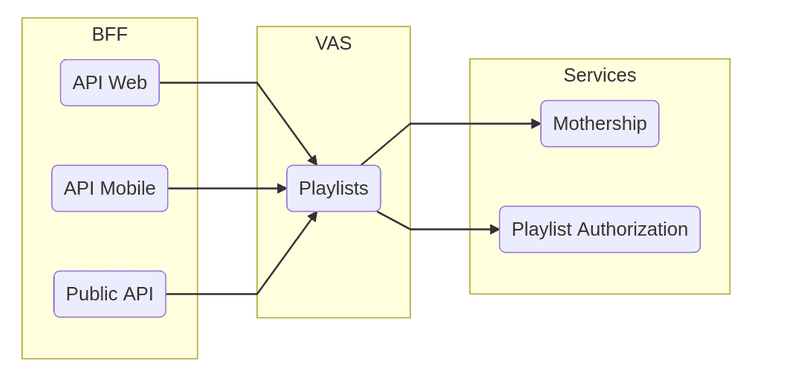

The following graph shows our solution:

With this architecture, all the logic is centralized in the Playlists VAS.

This project was divided into the following steps:

- Extracting the logic from the BFFs and creating a new Playlists VAS. We investigated and documented all the different implementations of playlist logic in our main services to come up with a common baseline for fetching playlists. Once we had documented all the information needed and collected feedback from the rest of the organization, we implemented the service.

- Automated tests to ensure that the centralized logic matches the refactored services. We added unit tests to cover all the possible scenarios of fetching and authorization logic for playlists. We also added integration tests to ensure that the response formats clients rely on weren’t broken, and that integrations with dependent services were working properly.

- Migrating the BFFs to use the Playlists VAS. This might seem like an easy step, but it actually took us longer than implementing the service itself. We had to migrate more than 50 playlist-related endpoints from the main BFFs. We did this endpoint by endpoint, and by carefully comparing responses from both implementations, as we didn’t want to break anything from the clients of the BFFs.
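The response comparison in the last step can be approximated with a small structural diff over JSON-like values. This is a sketch of the general technique, not the tooling SoundCloud used; `diff_responses` and its path notation are assumptions.

```python
from typing import Any


def diff_responses(old: Any, new: Any, path: str = "$") -> list[str]:
    """Return the paths at which two JSON-like values differ."""
    if isinstance(old, dict) and isinstance(new, dict):
        diffs: list[str] = []
        for key in sorted(set(old) | set(new)):
            if key not in old or key not in new:
                diffs.append(f"{path}.{key}")  # key present on one side only
            else:
                diffs.extend(diff_responses(old[key], new[key], f"{path}.{key}"))
        return diffs
    if isinstance(old, list) and isinstance(new, list) and len(old) == len(new):
        diffs = []
        for i, (o, n) in enumerate(zip(old, new)):
            diffs.extend(diff_responses(o, n, f"{path}[{i}]"))
        return diffs
    # Scalars, mismatched types, or lists of different length.
    return [] if old == new else [path]
```

Running the old and the new implementation of an endpoint on the same request and logging any non-empty diff gives a per-endpoint signal for when it is safe to switch over.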

What We Learned

VAS comes with many benefits, and it was a logical solution for the problems seen with playlist resolution at SoundCloud. The first and perhaps most obvious benefit was that a Playlists VAS reduces duplicated playlist code in the BFFs. Each BFF contained the same logic for orchestrating various calls to dependent services for resolving playlist metadata, but with a VAS, we could consolidate this logic in one service. Having a centralized service containing business logic means refactoring and optimizations are easier and faster. This also helps simplify cross-platform feature development, as aggregates (in this case, playlists) are only exposed in a central place. So it becomes trivial for the rest of the clients to access playlists instead of reimplementing that functionality in each BFF.

One of the most critical parts of fetching playlists was authorization: Leaking private or unreleased tracks to the general public is a worst-case scenario we want to avoid at all costs. Authorization logic was spread out over multiple services, increasing the risk of inconsistencies (and therefore vulnerabilities) sneaking in over time. The Playlists VAS means having one central codebase for authorization logic, which can be audited easily.

It’s worth noting here that VAS-to-VAS communication can occur in some circumstances. Let’s say, for example, that a request is sent over to the Playlists VAS to add a track to a given playlist. Before we continue with this write command, we must first check that the track is visible to the session user. Authorization logic for entities is centralized in the VAS, so we’d have to make a request to the Tracks service to determine whether the requested track is authorized to be added. In this case, we ensured that the previous logic for tracks that depended on playlists was decoupled, meaning we can have VAS-to-VAS communication without circular dependencies.
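A minimal sketch of this one-way dependency might look like the following. The class names mirror the services in the text, but the methods, the in-memory stores, and the visibility rule are assumptions made for illustration.

```python
class TracksVas:
    """Owns track visibility rules; knows nothing about playlists."""

    def __init__(self, visible_to: dict[str, set[str]]) -> None:
        # track_id -> user IDs allowed to see it (hypothetical rule store)
        self._visible_to = visible_to

    def is_visible(self, track_id: str, user_id: str) -> bool:
        return user_id in self._visible_to.get(track_id, set())


class PlaylistsVas:
    """Depends on TracksVas, never the other way around."""

    def __init__(self, tracks: TracksVas) -> None:
        self._tracks = tracks
        self._playlists: dict[str, list[str]] = {}

    def add_track(self, playlist_id: str, track_id: str, user_id: str) -> bool:
        """Write command: ask the Tracks VAS before mutating the playlist."""
        if not self._tracks.is_visible(track_id, user_id):
            return False
        self._playlists.setdefault(playlist_id, []).append(track_id)
        return True

    def track_ids(self, playlist_id: str) -> list[str]:
        return list(self._playlists.get(playlist_id, []))
```

Since `TracksVas` holds no reference to `PlaylistsVas`, the call graph stays acyclic.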

Considerations

The migration of entities to their own services has many benefits, as outlined above. However, implementing this solution has downsides as well, including:

- Adding a new service to our systems. Creating a new VAS comes with maintenance costs and infrastructure costs. We need to provision new nodes, deploy the monitoring service, maintain a new codebase, add a new service to our on-call rotation, etc.

- Network latencies. In previous implementations, when BFFs wanted to fetch playlist metadata, they just needed to do a round trip to Mothership and Playlist Authorization. With this new implementation in place, they need to do an extra round trip to the Playlists VAS.

In addition to considering downsides, we also considered the alternate solution of having the Playlists VAS as an external library that would be linked during the app build process. This is an approach we used in the past — for instance, with our JVMKit library (you can read more about that here). However, this solution comes with extra complexity and risk, in that different versions of the library could be used in each service, potentially causing a state with diamond dependencies. In our case, the benefits of introducing a VAS far outweigh the tradeoffs.

It’s also worth mentioning that the approach of using a VAS means we can expose new integrations using Twinagle, which is an in-house implementation of the Twirp protocol for Finagle. You can read more about the motivation and benefits behind Twinagle in our related blog post, but one bonus is the added ease of integration and maintainability. Twinagle uses an interface description language (IDL) to generate server stubs and clients, meaning that integrating services have a clearly defined API to work with. Making changes to the VAS therefore becomes safer, given that the API contract stays consistent and will clearly expose any (backward compatible) changes made, with all implementation details being encapsulated behind the API.

Summary

The adoption of Value-Added Services at SoundCloud has been well received. It allows for a cleaner architecture and a better separation of concerns. We’re definitely going to move more entities to their own VAS and extend existing ones to have more operations, all of which allows us to have a clear roadmap for the architecture of our microservices.

In the next blog post — and the last one in this series on the evolution of Service Architecture at SoundCloud — we’ll talk about the next iteration of Value-Added Services and how they evolved into Domain Gateways.

Part 3: Domain Gateways

src: Service Architecture at SoundCloud — Part 3: Domain Gateways - 2021-09-17

This article is the last part in a series of posts aiming to cast some light onto how service architecture has evolved at SoundCloud over the past few years, and how we’re attempting to solve some of the most difficult challenges we encountered along the way.

In the second part of this series, we discussed how we evolved the use of the BFF pattern in SoundCloud by moving existing duplicated logic to a more centralized and elegant solution called Value-Added Services (VAS). We covered how we benefit from this architecture pattern, as we have all the authorization, content policies, and fetching logic of tracks and playlists in a single service.

In this blog post, we’ll cover how we evolved the concept of Value-Added Services to Domain Gateways, which allow us to extend those services to have read and write operations in a single and centralized service for each business domain.

Growing Aggregates

As we described in the previous installment, the core responsibility of a VAS is to serve our core aggregates, such as Track and Playlist. To do this, a VAS fetches states for associated entities and value objects from corresponding Foundation¹ services, and then it applies business authorization rules. For example, the Tracks VAS will filter out all tracks that are geoblocked in certain territories.

Roughly speaking, one can imagine a VAS as a big fanout together with authorization logic. One of the first challenges that we faced while extending our Value-Added Services was the size of this fanout. As we were adding features to the platform, our aggregates, and hence the number of network calls, were growing as well.

On the other side, our BFFs often have different needs dictated by their applications. For example, one track feature might only be available on mobile, which makes fetching the entire track aggregate from the Web API unnecessary. Moreover, even within a single BFF, we sometimes support multiple aggregate representations that can be built without fetching all dependencies.

How can we provide centralized endpoints for serving aggregates that can be customized to the specific needs of BFFs? Luckily, this problem has a pretty straightforward solution: partial responses. This pattern allows API consumers to tell the producer which part of the response they’re going to consume by specifying a FieldMask in the request. Field masks support protobuf and JSON representations that make them essentially protocol agnostic.²

In our particular case, we use Twinagle, our in-house implementation of the Twirp protocol, with protobuf as the IDL. Protobuf definitions provide type-safe construction and validation out of the box via FieldMaskUtils that we’ve ported to the ScalaPB library.

One disadvantage of field masks for partial responses is a tighter coupling between microservice topology and aggregate schemas (IDLs). Field masks can be defined according to service dependencies and network calls to reduce the number of requests necessary to produce a BFF representation. At SoundCloud, our focus is more on the reduction of complexity in the edge layer (specifically in BFFs). While field masks can optimize network calls as well, it isn’t necessary to have a 1:1 mapping between field masks and network calls.
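The relationship between field masks and network calls can be illustrated with a plain-Python sketch. It deliberately does not use the protobuf `FieldMask` type; the mask is modeled as a set of field names, and the services, field names, and `get_track` function are assumptions made for the example. Note how two mask fields share one upstream call, so the mapping is not 1:1.

```python
calls: list[str] = []  # records which upstream services were contacted


def fetch_metadata(track_id: str) -> dict:
    calls.append("metadata")
    return {"title": "Demo", "description": "A demo track"}


def fetch_stats(track_id: str) -> dict:
    calls.append("stats")
    return {"play_count": 10}


SERVICES = {"metadata": fetch_metadata, "stats": fetch_stats}

# Which upstream service provides each field of the aggregate.
FIELD_SOURCES = {"title": "metadata", "description": "metadata", "play_count": "stats"}


def get_track(track_id: str, field_mask: set[str]) -> dict:
    """Return only the requested fields, calling only the services they need."""
    unknown = field_mask - set(FIELD_SOURCES)
    if unknown:
        raise ValueError(f"unknown fields: {sorted(unknown)}")
    fetched: dict = {}
    for service in sorted({FIELD_SOURCES[f] for f in field_mask}):
        fetched.update(SERVICES[service](track_id))
    return {f: fetched[f] for f in sorted(field_mask)}
```

A BFF that only renders titles would pass `{"title"}` and never trigger the stats call, while the aggregate schema itself stays independent of how the upstream services are split.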

Commands

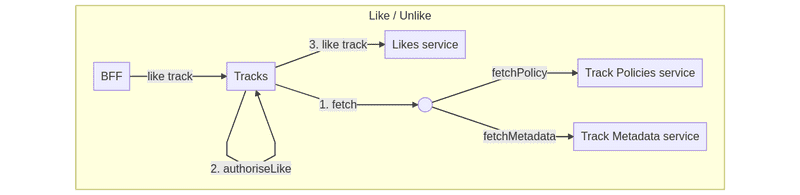

While we were extending the scope of the VAS to serve aggregates of our entities, we identified that we could also extend our VAS to those actions that mutate the state of the core entity (i.e. write operations) but at the same time would require authorization logic. To centralize even more core entities, we extended our VAS with commands. Some examples of these command operations in the Tracks domain include “download a track,” “like a track,” and “repost a track.”

Because these operations live in the VAS, we also reduce complex logic in the BFFs (in case such logic was duplicated there) and make access checks more reliable for operations that require access to a given track.

We can illustrate the case of liking a track in the Tracks VAS:

As we can see in the graph, BFFs send a request to the Tracks service to perform a track operation. “Like” operations are usually registered by the Likes service. This service isn’t aware of track authorizations; it only creates/deletes links between tracks/playlists and users. That’s why we first need to check whether the user who wants to like a track has access to it. The best place to centralize this check is the Tracks VAS.
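In code, the command could be sketched roughly like this. The `LikesService` stand-in, the `can_access` callback, and the `PermissionDenied` exception are hypothetical; they only illustrate the ordering of the authorization check and the write.

```python
from typing import Callable


class PermissionDenied(Exception):
    """Raised when the session user may not access the track."""


class LikesService:
    """Stand-in for the Likes service: it stores links and does no authorization."""

    def __init__(self) -> None:
        self.links: set[tuple[str, str]] = set()

    def create(self, user_id: str, track_id: str) -> None:
        self.links.add((user_id, track_id))


class TracksVas:
    def __init__(self, likes: LikesService,
                 can_access: Callable[[str, str], bool]) -> None:
        self._likes = likes
        self._can_access = can_access  # hypothetical rule: (user_id, track_id) -> bool

    def like_track(self, user_id: str, track_id: str) -> None:
        """Command: authorize first, then delegate the write to the Likes service."""
        if not self._can_access(user_id, track_id):
            raise PermissionDenied(track_id)
        self._likes.create(user_id, track_id)
```

The Likes service stays a simple link store, and every BFF gets the same access check by calling `like_track` instead of talking to Likes directly.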

Separation of Queries and Commands

To summarize, the VAS interface consists of two parts: an endpoint to serve its aggregate according to BFF needs, which we call queries; and endpoints that expose core entity operations, which we call commands. This separation is the core idea behind the CQRS pattern and provides some practical benefits, as it’s possible to provide separate upstream services or stores for read and write operations. For example, the foundational service that provides operations to add or remove a follow/er/s relationship between two users (a write) is different from the service that serves follower counts. This relationship between foundational services is now abstracted away from users of the Tracks VAS, which improves consistency and reduces complexity in the BFFs.
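The follow example can be sketched as a single interface that routes commands and queries to different upstream services. The class and method names below are assumptions, and how the read-side store is kept up to date is deliberately left out of the sketch.

```python
class FollowsService:
    """Write-side stand-in: records follow relationships."""

    def __init__(self) -> None:
        self.edges: set[tuple[str, str]] = set()

    def add(self, follower_id: str, followee_id: str) -> None:
        self.edges.add((follower_id, followee_id))


class FollowerCountsService:
    """Read-side stand-in: serves counts from its own, separately maintained store."""

    def __init__(self, counts: dict[str, int]) -> None:
        self._counts = counts

    def get(self, user_id: str) -> int:
        return self._counts.get(user_id, 0)


class UsersVas:
    """Hypothetical VAS exposing one command and one query to BFFs."""

    def __init__(self, follows: FollowsService, counts: FollowerCountsService) -> None:
        self._follows = follows
        self._counts = counts

    def follow(self, follower_id: str, followee_id: str) -> None:
        """Command: routed to the write-side service."""
        self._follows.add(follower_id, followee_id)

    def follower_count(self, user_id: str) -> int:
        """Query: routed to the read-side service."""
        return self._counts.get(user_id)
```

Callers see one interface and do not need to know that reads and writes are served by different systems.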

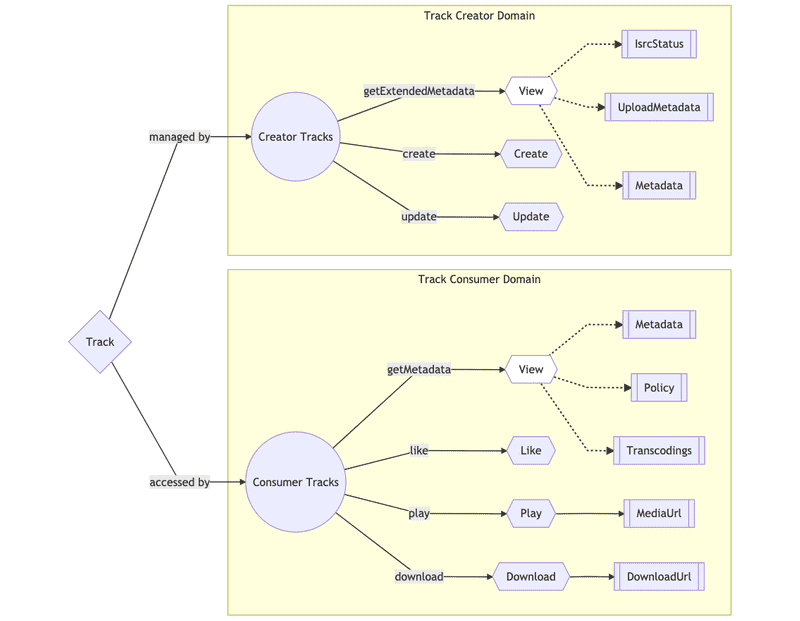

Beyond Core Entities: Domain Gateways

As our VAS grew in scope, we identified that a single core entity (like a track) can be used in different domains, for different purposes, and with different access patterns and authorization requirements. For example, SoundCloud not only provides a consumer application for a music catalog; it also provides tools for creators to upload and distribute their music. Consumer and Creator are different domains, owned by different teams, all of them referencing and using tracks for different purposes within their specific domains.

A possible approach in this case is to implement everything that can possibly be done with a track (in all the different domains) — including related queries and commands — in a single VAS. This can work well for some time, but eventually there’s a risk of creating large amounts of coupling and complexity, causing friction and decreasing productivity.

A more scalable approach is to identify the different business domains that need to make use of a given entity and create a Domain Gateway for each of them. In essence, a Domain Gateway is an implementation of a VAS tied to a specific business domain. Each one can be maintained by different teams and represent different views on a given entity, relying on the same foundational layer of services. This façade can provide stability and act as an anti-corruption layer for each of the domains.

The Domain Gateway approach involves a certain level of duplication in exchange for autonomy and increased scalability, and it makes sense to apply in cases in which the different domains have very different access patterns and highly disjoint feature sets, or when communication and collaboration between teams is difficult (for example, due to geographic location of teams and non-overlapping time zones).
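As a rough sketch of the idea, two gateways can expose different views of the same track over a shared foundational store. The data, field names, and access rules below are invented for illustration and say nothing about SoundCloud's real domains beyond what the text states.

```python
from typing import Optional

# Shared Foundation-layer stand-in; field names are hypothetical.
TRACKS = {
    "t1": {"title": "Demo", "owner_user_id": "u1", "public": False,
           "upload_status": "processing"},
    "t2": {"title": "Single", "owner_user_id": "u1", "public": True,
           "upload_status": "finished"},
}


class ConsumerTracksGateway:
    """Consumer domain: listeners only see public tracks, in a playback view."""

    def get_track(self, track_id: str) -> Optional[dict]:
        track = TRACKS.get(track_id)
        if track is None or not track["public"]:
            return None
        return {"id": track_id, "title": track["title"]}


class CreatorTracksGateway:
    """Creator domain: owners manage their own uploads, public or not."""

    def get_track(self, track_id: str, user_id: str) -> Optional[dict]:
        track = TRACKS.get(track_id)
        if track is None or track["owner_user_id"] != user_id:
            return None
        return {"id": track_id, "title": track["title"],
                "upload_status": track["upload_status"]}
```

The small amount of duplicated lookup code is the price paid for letting each domain evolve its view and its rules independently.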

Summary

As we discussed in the previous blog post, the evolution of SoundCloud’s architecture into a three-tier architecture with Value-Added Services as authoritative entry points for accessing aggregates has proven successful, even more so after evolving them into the concept of Domain Gateways. This is a pattern that we’ll continue adopting and applying in the future.

We plan to move other operations that are duplicated in our codebases to their respective gateways. This will give us more flexibility to evolve our system and add new functionality without the hassle of duplicating it in each of the BFFs.

In parallel, we’ll continue encouraging feature teams to evolve their microservices architecture around their core domain. This will create a more solid landscape where business logic is centralized and more easily accessible from other dependent systems.

Finally, we’re still exploring the possibilities enabled by Domain Gateways — including improved team autonomy and reduced cycle times for our development process.

1: For a review of SoundCloud’s architectural layers, refer to our previous blog post.

2: GraphQL is an alternative approach to provide an API that can be customized to consumer needs. Although it provides more flexibility, we decided that its benefits won’t offset the cost of migration from our standard Twinagle stack.